Category: Clinical Trials

Vitamin D supplements do not reduce the risk of depression

A newly published randomized controlled trial finds that vitamin D supplementation has no effect on depression. This adds to the long list of medical conditions for which vitamin D supplementation has turned out to be ineffective.

Hydroxychloroquine to treat COVID-19: Evidence can’t seem to kill it

Despite the accumulating negative evidence showing that hydroxychloroquine doesn't work against COVID-19, activists continue to promote it as a way out of the pandemic. This week, the AAPS and a Yale epidemiologist joined the fray with embarrassingly bad arguments.

Another Broken Meditation Study

Another study shows meditation does not work, but the authors manage to conclude that it does.

Probiotics, revisited

New guidelines do not recommend probiotics for most gastrointestinal conditions.

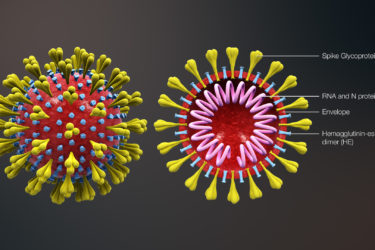

Is COVID-19 transmitted by airborne aerosols?

A recurring debate about COVID-19 bubbled up late last week, when a group of scientists announced an as-yet unpublished open letter to the World Health Organization arguing that COVID-19 transmission is airborne and urging it to change its recommendations. What is this debate about, and, if coronavirus is airborne, should we be more scared?

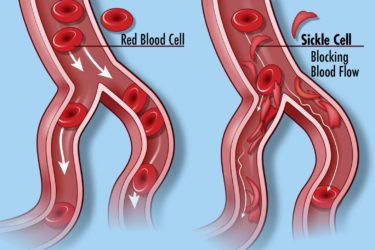

New Drugs for Sickle Cell Disease: Small Benefit, Large Price

The FDA has approved two new drugs to treat sickle cell disease. They don’t do much, and they are prohibitively expensive.

COVID-19: Out-of-control science and bypassing science-based medicine

During the COVID-19 pandemic, there hasn't just been a pandemic of coronavirus-caused disease. There's also a pandemic of misinformation and bad science. It turns out that doctors today are just as prone as doctors 100 years ago during the 1918-19 influenza pandemic to bypass science-based medicine in their desperation to treat patients.

Hydroxychloroquine and the price of abandoning science- and evidence-based medicine

Based on anecdotal evidence early in the pandemic and then-unreported clinical trials, followed by hype and bad studies by French "brave maverick doctor" Didier Raoult, antimalarial drugs hydroxychloroquine and chloroquine became the de facto standard of care for COVID-19, despite no rigorous evidence that they worked. A steady drip-drip-drip of negative studies has led doctors and health authorities to rethink and backtrack,...

“Miracle cure” testimonials aside, azithromycin and hydroxychloroquine probably do not work against COVID-19

Here we go again. Didier Raoult has published another uninformative study looking at the use of hydroxychloroquine and azithromycin to treat COVID-19. Unfortunately, recent data on these drugs increasingly suggest that they probably don't work against COVID-19 but do cause harm. Sadly, the lack of evidence hasn't stopped the hucksters from promoting hydroxychloroquine as...

Do face masks decrease the risk of COVID-19 transmission?

As wearing masks to prevent the spread of COVID-19 becomes a culture war issue, the evidence for whether they prevent transmission of COVID-19 remains contested. A new systematic review and meta-analysis provides the best evidence yet that social distancing and masks are highly effective at decreasing the risk of contracting coronavirus, while eye protection might also help, but it's not a slam...