The last couple of weeks, I’ve made allusions to the “Bat Signal” (or, as I called it, the “Cancer Signal,” although that’s a horrible name and I need to think of a better one). Basically, when the Bat Cancer Signal goes up (hey, I like that one better, but do bats get cancer?), it means that a study or story has hit the press that demands my attention. It happened again just last week, when stories started hitting the press hot and heavy about a new study of mammography, stories with titles like Vast Study Casts Doubts on Value of Mammograms and Do Mammograms Save Lives? ‘Hardly,’ a New Study Finds, but I had a dilemma. The reason is that the stories about this new study hit the press largely last Tuesday and Wednesday, the study having apparently been released “in the wild” Monday night. People were e-mailing me and Tweeting at me the study and asking if I was going to blog it. Even Harriet Hall wanted to know if I was going to cover it. (And you know we all have a damned hard time denying such a request when Harriet makes it.) Even worse, the PR person at my cancer center was sending out frantic e-mails to breast cancer clinicians because the press had been calling her and wanted expert comment. Yikes!

What to do? What to do? My turn to blog here wasn’t for five more days, and, although I have in the past occasionally jumped my turn and posted on a day not my own, I hate to draw attention from one of our other fine bloggers unless it’s something really critical. Yet, in the blogosphere, stories like this have a short half-life. I could have written something up and posted it on my not-so-secret other blog (NSSOB, for you newbies), but I like to save studies like this to appear either first here or, at worst, concurrently with a crosspost at my NSSOB. (Guess what’s happening today?) So that’s what I ended up doing, and in a way I’m glad I did. The reason is that it gave me time to cogitate and wait for reactions. True, it’s at the risk of the study fading from the public consciousness, as it had already begun to do by Friday, but such is life.

Mammograms don’t save lives, quoth the BMJ (and everyone covering the study)!

After my obligatory navel-gazing explanatory introduction that infuriates some and entertains others, let’s jump into the study itself. It was published in the BMJ and is, as the title tells us, the Twenty five year follow-up for breast cancer incidence and mortality of the Canadian National Breast Screening Study: randomised screening trial. Before we delve into the findings, I should take a moment to explain what the Canadian National Breast Screening Study (CNBSS) actually is. The first thing you need to know is that this study has been contentious since its very beginning. In particular, radiologists have been very critical of the study. One radiologist in particular, whom we’ve encountered before, pops up time and time again in articles critical of the CNBSS. This doesn’t mean that his criticisms of the study are invalid, but this particular radiologist sends up a red flag given his track record of some truly badly thought-out criticisms he’s leveled at other mammography studies, most notably about a year ago.

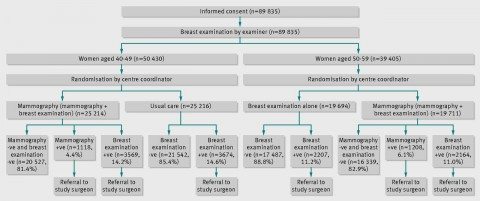

The CNBSS, conceived in the late 1970s and begun in 1980, was a randomized clinical trial that was designed to answer two questions, depending upon the age group: (1) how regular breast examination compares to breast examination plus screening mammography (age 50-59) and (2) how screening mammography plus breast examination compares to “usual care” (age 40-49). These were questions that had arisen from the only existing large study published at the time, the New York Health Insurance Plan (HIP) Study, which in 1963 had randomized (without informed consent) women between the ages of 40 and 64 such that around 30,000 received annual two-view mammography and clinical breast examination for three screens, with another 30,000 serving as controls who received “usual care” (i.e., clinical breast examination). The results, first published in 1977, indicated a statistically significant reduction in breast cancer mortality of 23%. However, no benefit was seen in the 40-49 year old age group. Also, over an eight-year period after diagnosis, breast cancer cases that were positive only on mammography when screened had a case fatality rate of 14%, compared to 32% for cases positive only in the clinical examination and 41% for cases positive on both modalities. The thought at the time was that the reason no difference was seen in younger women was that the incidence of breast cancer is so much lower in women aged 40-49 than it is in women aged 50-64. As I’ve discussed many times before, the less common a disease is in a population being screened, the more false positives there will be for each true positive, and the harder it will be to detect a decline in mortality from that disease due to screening, because any observed decline will be smaller on an absolute basis. That’s almost certainly why the early mammography studies that led to the implementation of widespread mammographic screening programs for the most part were unable to demonstrate a benefit in terms of preventing death from breast cancer in women under 50.
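For readers who like to see the prevalence point worked out, here is a minimal sketch using Bayes' rule. The sensitivity, specificity, and prevalence values are hypothetical numbers I picked purely for illustration; none of them comes from the HIP Study or the CNBSS.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Fraction of positive screens that are true positives (Bayes' rule)."""
    true_positives = prevalence * sensitivity
    false_positives = (1.0 - prevalence) * (1.0 - specificity)
    return true_positives / (true_positives + false_positives)


# ASSUMPTION: hypothetical test characteristics, for illustration only.
SENSITIVITY = 0.85
SPECIFICITY = 0.90

# ASSUMPTION: hypothetical prevalences standing in for a lower-risk
# (younger) group and a higher-risk (older) group.
groups = [("lower-prevalence group", 0.002), ("higher-prevalence group", 0.005)]

for label, prevalence in groups:
    ppv = positive_predictive_value(prevalence, SENSITIVITY, SPECIFICITY)
    false_per_true = (1.0 - ppv) / ppv
    print(f"{label}: PPV = {ppv:.3f}, "
          f"false positives per true positive = {false_per_true:.0f}")
```

With the same test, the lower-prevalence group ends up with more false positives for every true positive, which is the qualitative point made above. The exact figures depend entirely on the assumed inputs.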

In any case, the HIP Study had raised the question of what the incremental benefit of screening mammography was over “usual care,” which included, in most cases, regular visits to one’s primary care doctor and breast self-examination. This was described in the introduction to the study reported last week, thusly:

In 1980 a randomised controlled trial of screening mammography and physical examination of breasts in 89,835 women, aged 40 to 59, was initiated in Canada, the Canadian National Breast Screening Study.4 5 6 7 It was designed to tackle research questions that arose from a review of mammography screening in Canada8 and the report by the working group to review the US Breast Cancer Detection and Demonstration projects.9 At that time the only breast screening trial that had reported results was that conducted within the Health Insurance Plan of Greater New York.10 11 Benefit from combined mammography and breast physical examination screening was found in women aged 50-64, but not in women aged 40-49. Therefore the Canadian National Breast Screening Study was designed to evaluate the benefit of screening women aged 40-49 compared with usual care and the risk benefit of adding mammography to breast physical examination in women aged 50-59. It was not deemed ethical to include a no screening arm for women aged 50-59.

So basically, there were two parts to this study: Mammographic screening plus regular clinical breast examination versus usual care in women aged 40-49, and mammographic screening plus regular clinical breast examination versus regular clinical breast examination alone in women aged 50-59. Here’s the study schema:

Women with any abnormal findings, be it on physical examination or mammography, were referred to a special review clinic directed by the surgeon affiliated with the study center, where, if indicated, diagnostic mammography was performed. (This study was carried out at 15 screening centres in six Canadian provinces, located in teaching hospitals or in cancer centers). I deem it important right here to emphasize yet again that all of these mammography studies were carried out in asymptomatic women (i.e., women who didn’t have any symptoms or lumps in their breasts). The reason I consider it important is that screening and diagnostic mammography are frequently confused in the minds of the lay public, and there is no controversy about what a woman who detects a lump in her breast or whose doctor detects one should do: Get it checked out with diagnostic mammography and (often) ultrasound, sometimes complemented with MRI. That’s the difference between diagnostic and screening mammography. Diagnostic mammography is done with the intention of working up an abnormality found on physical examination or screening mammography to determine if it needs to be biopsied. Pontification thus ended, I now point out that women who needed biopsies got them done by a surgeon to whom their primary care doctor referred them, and women who were thus diagnosed with cancer underwent treatment by surgeons and oncologists chosen by their primary care doctor.

Study subjects who enrolled had a physical examination (clinical breast exam) and were taught breast self-examination by trained nurses. Then they were randomized according to the schema above as described in the protocol:

Irrespective of the findings on physical examination, women aged 40-49 were independently and blindly assigned randomly to receive mammography or no mammography. Those allocated to mammography were offered another four rounds of annual mammography and physical examination, those allocated to no mammography were told to remain under the care of their family doctor, thus receiving usual care in the community, although they were asked to complete four annual follow-up questionnaires. Women aged 50-59 were randomised to receive mammography or no mammography, and subsequently to receive four rounds of annual mammography and physical examination or annual physical breast examinations without mammography at their screening centre.

In reporting the results, the investigators refer to the mammography plus breast physical examination arm in both age groups as the mammography arm, and the no mammography arms (usual care for women aged 40-49 and annual breast physical examinations for women aged 50-59) as the control arm. Also, the study is often referred to in two ways. The arm for women aged 40-49 is often referred to as CNBSS-1, and the arm for women aged 50-59 is often referred to as CNBSS-2. Just to make that clear.

So let’s get to the results. But before I do, let’s look at the last times the results were reported for this study, the 13 year follow-up in 2000 for CNBSS-2 and the 11-16 year follow-up in 2002 for CNBSS-1. The first report on CNBSS-2 showed no difference in breast cancer-specific mortality between the two groups in women aged 50-59; actually, the numbers showed slightly more deaths in the screening group, but that difference was nowhere near statistically significant. Although there was an unwritten assumption that there was likely to be a benefit to the addition of mammographic screening that just hadn’t shown up yet because the follow-up time was too short, the authors were forced to conclude that “our estimates of effect exclude a 30% reduction in breast cancer mortality from mammography screening” and that “chance is an unlikely explanation for our findings.” In the second study, reporting the results for CNBSS-1, the investigators concluded:

After 11 to 16 years of follow-up, four or five annual screenings with mammography, breast physical examination, and breast self-examination had not reduced breast cancer mortality compared with usual community care after a single breast physical examination and instruction on breast self-examination. The study data show that true effects of 20% or greater are unlikely.

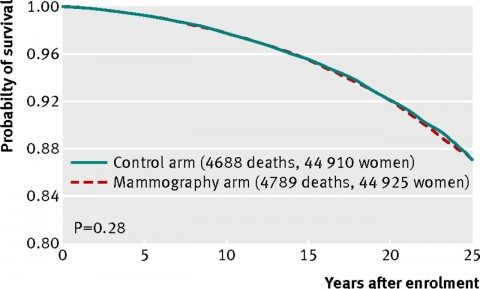

In other words, as of 2002, no benefit to adding mammography to routine care in women under 50 or to regular clinical breast examination in women 50-59 had yet been observed. The current study, unfortunately, completes the trend. Here’s the graph of all-cause mortality (all deaths of study participants):

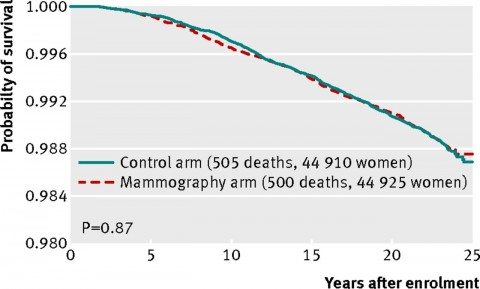

And here’s the graph for breast cancer-specific mortality (women who died of breast cancer):

As you can see, the curves line up almost exactly. There is no statistically significant difference. There’s not even a whiff of a hint of a statistically significant difference.

Now that the data are more mature, the investigators could do what they couldn’t do before, namely to make an estimate of how much overdiagnosis was occurring in the study. (Overdiagnosis is the detection of disease that doesn’t need to be treated, disease that would never progress within the lifetime of the patient to endanger her life.) The authors noted that at the end of the screening period, there was an excess of 142 breast cancer cases in the mammography arm compared to the control arm (666 versus 524). By fifteen years after enrollment, the excess became constant at 106 cancers, which was 22% of all screen-detected breast cancers. Because the mortality rates were the same between the mammography versus control groups, these cancers represent overdiagnosis.
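The arithmetic behind these figures is simple enough to check by hand, but here is a short sketch for anyone who wants to see it laid out. It uses only numbers quoted in this post. The one thing I am assuming is that the two arms were roughly equal in size, which lets me approximate the mammography arm as half of the 89,835 women enrolled.

```python
# Figures quoted in the post.
cases_mammography_arm = 666   # cancers by the end of the screening period
cases_control_arm = 524
persistent_excess = 106       # excess cancers once the gap became constant
overdiagnosed_share = 0.22    # share of screen-detected cancers
total_enrolled = 89_835       # women randomized in the CNBSS

excess_at_end_of_screening = cases_mammography_arm - cases_control_arm

# Implied by the two quoted figures (106 and 22%); a back-calculation,
# not a number read from the paper.
implied_screen_detected = persistent_excess / overdiagnosed_share

# ASSUMPTION: the arms were roughly equal in size.
approx_mammography_arm = total_enrolled / 2
women_per_overdiagnosis = approx_mammography_arm / persistent_excess

print(f"Excess cases at end of screening: {excess_at_end_of_screening}")
print(f"Implied screen-detected cancers: about {implied_screen_detected:.0f}")
print(f"Women screened per overdiagnosed cancer: about {women_per_overdiagnosis:.0f}")
```

Under that equal-arms assumption, the result lands right around the "1 in 424" figure that showed up in the press coverage.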

One notes that this number is remarkably similar to the estimates of overdiagnosis found in other clinical trials and epidemiological studies of mammography that I’ve discussed over the years. For example, way back in 2008, I discussed a study that suggested that 22% of breast cancers detected by screening mammography spontaneously regress.

Whoa. Maybe I shouldn’t have been so skeptical of that result when I wrote it up. And I’m not alone in noting how strikingly similar this number is to the rate of overdiagnosis in other studies. In an accompanying editorial, Kalager et al note the same thing, pointing out that “the amount of overdiagnosis observed in the previous randomised controlled trials is strikingly similar (22-24%).” Of course, there are others, many other studies, and in fact the 22% estimate is rather at the low end of some of the more recent studies. For example, the most recent “blockbuster” mammography study estimated the rate of overdiagnosis to be between 22% and 36%, depending upon the parameters used in the investigators’ model. One study that I discussed even suggested that one in three mammography-detected cancers were in fact overdiagnosed and overtreated, and I said:

Don’t get me wrong. There is no doubt that mammographic screening programs produce a rate of overdiagnosis. The question is: What is the rate? Unfortunately, the most accurate way to measure the true rate of overdiagnosis would be a prospective randomized trial, in which one group of women is screened and another is not, that follows both groups for many years, preferably their entire life. Such a study is highly unlikely ever to be done for obvious reasons, namely cost and the fact that there is sufficient evidence to show that mammographic screening reduces breast cancer-specific mortality for women between the ages of 50 and 70 at least, the latter of which would make such a study unethical. Consequently, we’re stuck with retrospective observational studies, such as the ones analyzed in this systematic review.

Well, the CNBSS is a randomized trial that follows women for their entire lives. Whatever its flaws (which will be discussed in the next section as I try to put it into context), it’s about as close to what I wanted four and a half years ago as we’re ever likely to get, which means that an overdiagnosis rate of somewhere around 20% or so is probably about as good an estimate of overdiagnosis of breast cancer by screening mammography as we’re ever likely to get. The problem, of course, boils down to two issues. First, we can’t tell which cancers diagnosed by screening mammography are overdiagnosed; i.e., which ones will never progress within the lifetimes of the women for whom they’re detected to endanger their lives. That leaves us a mandate to treat them all. Second, there is the question of whether this level of overdiagnosis is “worth it” for the level of benefit in reducing breast cancer mortality provided by screening mammography. The first problem, of course, can be solved by better predictive tests to separate the nasty players from the overdiagnosed players, but the second question is not so easy to answer.

Another important point is that this is the only large randomized study reported in the era of effective multimodality therapy with surgery, adjuvant chemotherapy regimens (chemotherapy administered after initial treatment to reduce the risk of recurrence), adjuvant Tamoxifen (Tamoxifen blocks the action of estrogen and can be used to decrease the risk of recurrence of tumors that respond to estrogen), and radiation therapy. This brings up the question of whether the reduction in mortality from breast cancer that we have observed since 1990—contrary to what you frequently hear, mortality from breast cancer is indeed falling and has been falling since around 1990—is primarily due to better treatment rather than earlier detection. There have been studies published over the last five years that suggest that this might be the case. But is it?

The knives come out, allowing me to (try to) put it all into context

Predictably, as always happens after a study like this, the knives came out, mostly wielded by radiologists. As is often the case, the criticisms were a mixture of the reasonable, the ridiculous, and the obviously turf-protecting. What’s depressing about many of the criticisms of the study is that too many of the people making them seem unaware of (or seem to deny) some very basic concepts about screening, namely overdiagnosis, overtreatment, lead time bias, and length bias. I’ve discussed them all before on multiple occasions, pointing out that the early detection of cancer does not always result in improved survival, and more sensitive tests can often lead to upstaging and more aggressive therapy without benefit. I’ve discussed overdiagnosis already. Lead time bias is a situation where early detection of the cancer doesn’t result in improved survival but only appears to do so because the disease is detected earlier and the patient lives longer with it. The best explanation of overdiagnosis (besides mine, of course) I’ve ever found can be read here. Length bias simply describes the tendency of screening to detect more slowly growing, indolent tumors. These problems have led to a major rethinking of prostate cancer screening and are beginning to do the same for breast cancer screening.
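Lead time bias is easier to see with a toy example. The sketch below compares one hypothetical patient under two scenarios; the ages are made up for illustration and the `Patient` class is just a convenience of mine, not anything from the study.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    age_at_diagnosis: float
    age_at_death: float

    @property
    def survival_years(self) -> float:
        """Survival as usually reported: time from diagnosis to death."""
        return self.age_at_death - self.age_at_diagnosis


# ASSUMPTION: hypothetical ages. Same woman, same disease course, same
# age at death; the only difference is when the tumor is found.
clinically_detected = Patient(age_at_diagnosis=65, age_at_death=70)
screen_detected = Patient(age_at_diagnosis=62, age_at_death=70)

lead_time = clinically_detected.age_at_diagnosis - screen_detected.age_at_diagnosis

print(f"Survival after clinical detection: {clinically_detected.survival_years} years")
print(f"Survival after screen detection:   {screen_detected.survival_years} years")
print(f"Lead time: {lead_time} years; age at death is unchanged")
```

Survival after diagnosis looks three years longer in the screened scenario even though the woman dies at exactly the same age. That is why mortality, not survival after diagnosis, is the endpoint that matters for a screening test.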

Indeed, the 15-25% reduction in breast cancer mortality cited by mammography proponents translates to an absolute risk picture in which averting one death from breast cancer with mammographic screening for women between the ages of 50 and 70 requires that 838 women be screened over 6 years, for a total of 5,866 screening visits, to detect 18 invasive cancers and 6 instances of ductal carcinoma in situ (DCIS). As reported in the New York Times treatment of this study, approximately 1 in 424 women in the CNBSS received unnecessary cancer treatment. In other words, mammographic screening is very labor- and resource-intensive, and a lot of women have to be screened to save one life. As I’ve also said many times in the past, whether this is “worth it” is more a value judgment than a scientific judgment, although that value judgment has to be informed by accurate science.
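To put those numbers in absolute terms, here is a quick back-of-the-envelope sketch. It uses only the figures quoted above; the variable names are mine.

```python
# Figures quoted in the post, per one breast cancer death averted.
women_screened = 838
screening_visits = 5_866
invasive_cancers_detected = 18
dcis_detected = 6

# Absolute risk reduction is the reciprocal of the number needed to screen.
absolute_risk_reduction = 1 / women_screened

visits_per_woman = screening_visits / women_screened
cancers_detected = invasive_cancers_detected + dcis_detected

print(f"Absolute risk reduction: {absolute_risk_reduction:.2%} per woman screened")
print(f"Screening visits per woman: {visits_per_woman:.0f}")
print(f"Cancers (invasive + DCIS) detected per death averted: {cancers_detected}")
```

The relative reduction sounds large, while the absolute reduction per woman screened is a fraction of a percent. Both descriptions come from the same data, which is why the "worth it" question ends up being a value judgment.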

Of course, the CNBSS is not without shortcomings. Indeed, it’s been attacked nearly from its earliest reports, mostly by radiologists. It’s instructive to peruse the criticisms posted after the article (one advantage of BMJ journal articles). They range from the reasonable to real howlers. An example of the latter comes, not surprisingly, from Daniel B. Kopans, a professor of radiology at the Harvard Medical School and someone who’s well known for attacking any study that questions mammography, particularly after the USPSTF guidelines were published in 2009, and who almost a year-and-a-half ago gave us this howler:

“This is simply malicious nonsense,” said Dr. Daniel Kopans, a senior breast imager at Massachusetts General Hospital in Boston. “It is time to stop blaming mammography screening for over-diagnosis and over-treatment in an effort to deny women access to screening.”

He was referring to H. Gilbert Welch’s study published in late 2012 in the New England Journal of Medicine that found a high degree of overdiagnosis due to mammography. As I pointed out at the time, Dr. Kopans was completely wrong, and overdiagnosis is a pitfall of screening programs. He’s also known for saying things like this about the members of the USPSTF task force that published a set of recommendations in 2009:

I hate to say it, it’s an ego thing. These people are willing to let women die based on the fact that they don’t think there’s a benefit.

It’s therefore not surprising that after the BMJ article, Dr. Kopans makes the same sorts of statements, statements echoed in a statement on the American College of Radiology’s website and in an article entitled We do not want to go back to the Dark Ages of breast screening, by Dr. László Tabár and Tony Hsiu-Hsi Chen, DDS, PhD, published on AuntieMinnie.com, described as providing “the first comprehensive community Internet site for radiologists and related professionals in the medical imaging industry”. Many of the criticisms are shared, although Dr. Tabár does appear to me to be a bit disingenuous when he says that “Canadian trials could not evaluate the independent impact of mammography because of the confounding effect of physical examination.” I suppose that’s why they compared physical examination to physical examination plus mammography in the 50-59 year old group.

Dr. Kopans’ first criticism was that the quality of the mammograms was below state-of-the-art, even for the 1980s. Indeed, Dr. Kopans has been making these arguments for the last 24 years. However, as has been pointed out, the purpose of the CNBSS was to examine whether the addition of mammography added anything to breast cancer screening and resulted in decreased mortality from breast cancer using community-based settings, in other words, using mammography as it was practiced in the community. Moreover, as others have pointed out, the quality of mammography increased over time. In any case, this and many of the criticisms leveled by Dr. Kopans and others have been fairly convincingly refuted by CNBSS investigator Cornelia J. Baines, who published an article entitled Rational and Irrational Issues in Breast Cancer Screening, and by an article on which Kopans himself was a coauthor, which showed that, although only 50% of mammograms had satisfactory image quality in 1980, by 1987 85% were judged to have satisfactory quality.

Perhaps the most serious charge made by Dr. Kopans is that there was misallocation of nastier cancers to the control arm. In other words, he charges:

In order to be valid, randomized, controlled trials (RCT) require that assignment of the women to the screening group or the unscreened control group is totally random. A fundamental rule for an RCT is that nothing can be known about the participants until they have been randomly assigned so that there is no risk of compromising the random allocation. Furthermore, a system needs to be employed so that the assignment is truly random and cannot be compromised. The CNBSS violated these fundamental rules (6). Every woman first had a clinical breast examination by a trained nurse (or doctor) so that they knew the women who had breast lumps, many of which were cancers, and they knew the women who had large lymph nodes in their axillae indicating advanced cancer. Before assigning the women to be in the group offered screening or the control women they knew who had large incurable cancers. This was a major violation, but it went beyond that. Instead of a random system of assigning the women they used open lists. The study coordinators who were supposed to randomly assign the volunteers, probably with good, but misguided, intentions, could simply skip a line to be certain that the women with lumps and even advanced cancers got assigned to the screening arm to be sure they would get a mammogram. It is indisputable that this happened since there was a statistically significant excess of women with advanced breast cancers who were assigned to the screening arm compared to those assigned to the control arm (7). This guaranteed that there would be more early deaths among the screened women than the control women and this is what occurred in the NBSS. Shifting women from the control arm to the screening arm would increase the cancers in the screening arm and reduce the cancers in the control arm which would also account for what they claim is “overdiagnosis”.

Make no mistake, Dr. Kopans is accusing the investigators running the CNBSS of scientific fraud here. I’m surprised he’s so bold about it. You’d think he’d have strong evidence to back up this charge. You’d be wrong. If what Dr. Kopans said were true, then the Canadian government should be going after the investigators. The authors themselves are aware of this charge and even answered it in their article:

We believe that the lack of an impact of mammography screening on mortality from breast cancer in this study cannot be explained by design issues, lack of statistical power, or poor quality mammography. It has been suggested that women with a positive physical examination before randomisation were preferentially assigned to the mammography arm.12 13 If this were so, the bias would only impact on the results from breast cancers diagnosed during the first round of screening (women retained their group assignment throughout the study). However, after excluding the prevalent breast cancers from the mortality analysis, the data do not support a benefit for mammography screening (hazard ratio 0.90, 95% confidence interval 0.69 to 1.16).

I actually agree with Dr. Kopans on this one point: Only women with no physical findings should have been randomized to screening mammography. That is perhaps the biggest flaw in the design of the CNBSS. However, excluding women diagnosed with a cancer on the first round of mammography, as the authors argue, and finding no difference in breast cancer mortality do rather argue that it probably didn’t make a difference. The authors also address another criticism, apparently leveled by Siddhartha Mukherjee in The Emperor of All Maladies, that the women in the mammography group were somehow at a higher risk for cancer. The authors point out that breast cancer was diagnosed in 5.8% of women in the mammography arm and in 5.9% of women in the control arm (P=0.80), showing that the risk of breast cancer was the same in both groups. Finally, there were reasons for why the allocation was done the way it was, and the explanation was not unreasonable:

Randomization was performed by the center coordinators after nurse examiners had clinically examined the participants. Center coordinators were blind to the results of the breast examination.

What in fact was the situation vis-a-vis randomization?

Most tellingly there was no incentive for screening personnel to subvert randomization. The CNBSS protocol required that anyone with an abnormal finding on [clinical breast examination] had to be referred to the study surgeon who would order a diagnostic mammogram when clinically indicated. Symptomatic women require diagnostic mammography, not screening mammography. It was not necessary to “place” as claimed [24] clinically positive participants in the mammography arm of the study in order for them to get a mammogram.

In the CNBSS there were more than 50 variables (demographic and risk factors) which were virtually identically distributed across control and study groups, clear evidence of successful randomization [25,26].

That certainly decreases, although it does not completely eliminate, my concern about the original design. There have also been other studies before that looked for evidence of subversion of randomization in the CNBSS and have failed to find evidence of nonrandom allocation of patients sufficient to affect the results of the trial. As was pointed out by a commenter after the BMJ article named Rolf Hefti, Dr. Kopans never mentions these studies that disagree with his conclusion, fails to note counterarguments that have been made to his accusations, and disingenuously complains about the low rate of detection by mammograms alone (32%), even though that number is consistent with rates reported in the 1990s, years after the screening period in the CNBSS ended.

And so the battle rages on, same as it ever was. What simultaneously amuses and depresses me most about this is the seeming underlying assumption that the CNBSS investigators wanted to find no benefit due to screening mammography. My guess is that they were probably horribly disappointed when they reported the first analysis and found no benefit to screening mammography and even more disappointed when the second analysis in the early 2000s found the same thing. No one does the enormous amount of work and spends the money to do a large multicenter trial involving tens of thousands of women because he wants to end up with a negative study, to the point that he would be willing to mess with the randomization to make it happen. The assumption underlying Dr. Kopans’ accusation is ludicrous.

The bottom line

I’m going to give you my bottom line, although it’s going to sound wishy-washy. Does the CNBSS “prove” that screening mammography is useless? Of course not, not any more than any single study ever could, given such a complex issue as screening for breast cancer. The CNBSS is a flawed study that could possibly be a false negative, such that any true benefit in terms of prevention of breast cancer mortality by mammography is buried in statistical noise. However, it is not nearly as flawed a study as its critics, such as Dr. Kopans and Dr. Tabár, would have you believe, nor is it a fraudulent study, as Dr. Kopans would apparently have you believe. It is the result of a group of investigators doing the best they could with the materials they had based on the knowledge they had in the late 1970s and must be weighed against all the other studies examining mammography finding benefit or no benefit. Moreover, it is not new information. It’s just a longer term follow-up of results first reported in the early 1990s and last reported more than ten years ago.

That being said, I still think it’s entirely appropriate for the study authors to conclude that “the data suggest that the value of mammography screening should be reassessed.” This isn’t a new conclusion either. It’s part of an evolution that’s been going on since before the USPSTF released its guidelines back in 2009. You’ll remember that back then I characterized those recommendations, which included not beginning routine mammographic screening until age 50, as “not the final word.” Clearly the CNBSS won’t be the last word, either, but it should be included as part of the evidence base for the reevaluation of mammography screening guidelines.

What doesn’t help is denial that overdiagnosis is a real phenomenon and rejection of the now-irrefutable contention that detecting a cancer earlier does not necessarily result in improved survival. There are some who are arguing that because patients with tumors detected by mammography-only in this study had better five year survivals than patients with tumors detected clinically, it means the mammography is worthwhile. That’s a horrible argument, because in reality the increased survival as observed in this study is, if anything, evidence in favor of overdiagnosis, an observation that was made, shockingly, in an article published in The Atlantic, given how much nonsense about medicine has been published in that magazine before. As I pointed out before, decreases in mortality, not necessarily improvements in survival, are the gold standard that shows a screening test (as opposed to a treatment) really does work.

Radiologists also argue that imaging technology is so much better today than it was in the 1980s. Even mammography itself is much better. This is undoubtedly true, but better, more sensitive imaging, while it could potentially make modern mammography screening programs more effective in preventing breast cancer deaths, could also greatly exacerbate the problem of overdiagnosis. Overdiagnosis is real, and there are diminishing returns in the detection of cancer. If treatment of screen-detected cancers, adjusted for lead time bias, doesn’t clearly result in improved survival, then there’s a problem.

The point, obviously, is to find the “sweet spot,” which maximizes the benefit of screening and minimizes the harms due to overdiagnosis and overtreatment. Based on current evidence, of which the CNBSS is just one more part, I’m more and more of the opinion that our mammography screening guidelines need to be tweaked and personalized because the current “one size fits all” regimen is probably too aggressive for most women at average risk for breast cancer. It’s an evolution in my thought that’s been going on for years. In any case, in any statement I’d put something in there about determining what the “sweet spot” is for mammography. It’s also reasonable, for now at least, to stick with existing guidelines, with perhaps more of a personalized approach to screening of women between ages 40 and 49. That’s what I intend to do until new evidence-based guidelines emerge. And emerge they will, likely within a year.

ADDENDUM: Dr. Miller’s response to the criticisms leveled at the CNBSS has been published, and he very convincingly put Dr. Kopans and others in their place.