Category: Clinical Trials

In which we are accused of “polarization-based medicine”

A little over a month ago, I wrote about how proponents of “complementary and alternative medicine” (CAM), now more frequently called “integrative medicine,” go to great lengths to claim nonpharmacological treatments for, well, just about anything as somehow being CAM or “integrative.” The example I used was a systematic review article published by several of the bigwigs at that government font of...

Infectious Diseases and Cancer

With apologies to my colleagues, infectious diseases really is the most interesting specialty in medicine. There are innumerable interesting associations and interactions of infectious diseases in medicine, history, art, science, and, well, life, the universe and everything. ID is so 42. A recent email led me to wander through the numerous interactions between infections and cancer. There are the cancers that are...

The stem cell hard sell, Stemedica edition

Stemedica Cell Technologies, a San Diego company that markets stem cell treatments for all manner of ailments, likes to represent itself as very much science-based. There are very good reasons to question that characterization, based on the histories of the people who run the company, as well as the company's behavior.

NCCIH funds sauna “detoxification” study at naturopathic school

It is no secret that we at SBM are not particularly fond of the National Center for Complementary and Integrative Health (NCCIH; formerly the National Center for Complementary and Alternative Medicine). We’ve lamented NCCIH’s use of limited public funds for researching implausible treatments, the unwarranted luster NIH/NCCIH funding bestows on quack institutions, the lack of useful research it has produced, and its...

“Non-pharmacological treatments for pain” ≠ CAM, no matter how much NCCIH wishes it so

When it comes to pain, in the mythos of "complementary and alternative medicine" (CAM), which in recent years has morphed into "integrative medicine," anything that isn't a drug is automatically rebranded as CAM, whether it's in any way "alternative" or not.

Nada for NADA: “acudetox” not effective in addiction treatment

The National Acupuncture Detoxification Association (NADA) teaches and promotes a standardized auricular acupuncture protocol, sometimes called “acudetox.” NADA claims acudetox encourages community wellness . . . for behavioral health, including addictions, mental health, and disaster & emotional trauma. I do not know what “community wellness” is or how one measures whether wellness has been successfully “encouraged.” In any event, in the NADA...

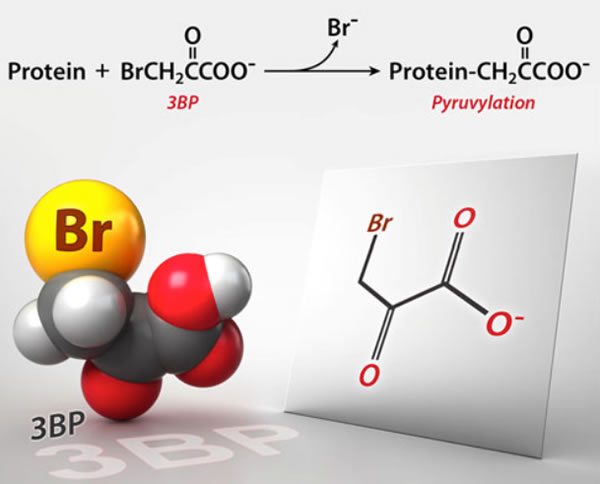

3-Bromopyruvate: The latest cancer cure “they” don’t want you to know about

Why is it that whenever naturopaths and other quacks embrace a new "cancer cure," somehow "they" (whoever "they" are) don't want you to know about it? In this case, it's 3-BP, an actual experimental drug that shows some promise but is by no means ready for prime time (or FDA approval) yet.

CARA: Integrating even more pseudoscience into veterans’ healthcare

The pixels were barely dry on David Gorski’s lament over the expansive integration of pseudoscience into the care of veterans when President Obama signed legislation that will exacerbate this very problem. The “Comprehensive Addiction and Recovery Act of 2016” (“CARA”) contains provisions that will undoubtedly keep Tracy Gaudet, MD, and her merry band of integrative medicine aficionados at the VA busy for...