Ed. Note: See the ADDENDUM at the end of this post.

I daresay that I’m like a lot of you in that I spend a fair bit of time on Facebook. This blog has a Facebook page (which, by the way, you should head on over and Like immediately). I have a Facebook page, and several of our bloggers, such as Harriet Hall, Steve Novella, Mark Crislip, Scott Gavura, Paul Ingraham, Jann Bellamy, Kimball Atwood, John Snyder, and Clay Jones, have Facebook pages too. It’s a ubiquitous part of life, and arguably part of the reason for our large increase in traffic over the last year. There are many great things about Facebook, although there are a fair number of issues as well, mostly having to do with privacy and with a reliance on automated scripts that cranks, such as antivaccine activists, can easily abuse to silence skeptics refuting their pseudoscience. Also, of course, every Facebook user has to realize that Facebook makes most of its money through targeted advertising directed at its users; the more its users reveal, the more precisely Facebook can target that advertising.

Whatever good and bad things there are about Facebook, however, there’s one thing that I never expected the company to be engaging in, and that’s unethical human subjects research. Yet if the stories and blog posts that appeared over the weekend are to be believed, that’s exactly what it did, and, worse, it’s not very good research. The study, entitled “Experimental evidence of massive-scale emotional contagion through social networks”, was published in the Proceedings of the National Academy of Sciences of the United States of America (PNAS), and its corresponding (and first) author is Adam D. I. Kramer, who is listed as being part of the Core Data Science Team at Facebook. Co-authors include Jamie E. Guillory at the Center for Tobacco Control Research and Education, University of California, San Francisco and Jeffrey T. Hancock from the Departments of Communication and Information Science, Cornell University, Ithaca, NY.

“IRB? Facebook ain’t got no IRB. Facebook don’t need no IRB! Facebook don’t have to show you any stinkin’ IRB approval!” Sort of.

There’s been a lot written over a short period of time (as in a couple of days) about this study. Therefore, some of what I write will be my take on issues others have already covered. However, I’ve also delved into some issues that, as far as I’ve been able to tell, no one has covered, such as why the structure of PNAS might have facilitated a study like this “slipping through” despite its ethical lapses.

Before I get into the study itself, let me explain a bit about where I’m coming from. I am trained as a basic scientist and a surgeon, but these days I mostly engage in translational and clinical research in breast cancer. The reason is simple. It’s always been difficult for all but a few surgeons to be both a basic researcher and a clinician and to do both well. However, with changes in the economics of even academic health care, particularly the ever-tightening drive for clinicians to see more patients and generate more RVUs, it’s become darned near impossible. So clinicians who are still driven (and masochistic) enough to want to do research have to go where their strengths are and make sure there’s a strong clinical bent to their research. That means clinical trials, in my case cancer clinical trials. That’s how I became so familiar with how institutional review boards (IRBs) work and with the requirements for informed consent. I’ve also experienced what most clinical researchers have experienced, both personally and through interactions with colleagues: the seemingly never-ending tightening of the requirements for what constitutes true informed consent. For the most part, this is a good thing. At times, however, IRBs do seem to go a bit too far, particularly in the social sciences. This PNAS study is not one of those times.

The mile-high view of the study is that Facebook intentionally manipulated the News Feeds of 689,003 English-speaking Facebook users between January 11 and 18, 2012, in order to determine whether showing more “positive” posts in a user’s feed acted as an “emotional contagion” that would inspire the user to post more “positive” posts himself or herself. Not surprisingly, Kramer et al found that showing more “positive” posts did exactly that, at least within the parameters as defined, and that showing more “negative” posts resulted in more “negative” posting. I’ll discuss the results and the problems with the study methodology in more detail in a moment. First, here’s the rather massive problem: Where is the informed consent? This is what the study says about informed consent and the ethics of the experiment:

Posts were determined to be positive or negative if they contained at least one positive or negative word, as defined by Linguistic Inquiry and Word Count software (LIWC2007) (9) word counting system, which correlates with self-reported and physiological measures of well-being, and has been used in prior research on emotional expression (7, 8, 10). LIWC was adapted to run on the Hadoop Map/Reduce system (11) and in the News Feed filtering system, such that no text was seen by the researchers. As such, it was consistent with Facebook’s Data Use Policy, to which all users agree prior to creating an account on Facebook, constituting informed consent for this research. Both experiments had a control condition, in which a similar proportion of posts in their News Feed were omitted entirely at random (i.e., without respect to emotional content).

Does anyone else notice anything? I noticed right away, both from news stories and when I finally got around to reading the study itself in PNAS. That’s right. There’s no mention of IRB approval. None at all. I had to go to a story in The Atlantic to find out that apparently the IRB of at least one of the universities involved did approve this study:

Did an institutional review board—an independent ethics committee that vets research that involves humans—approve the experiment?

Yes, according to Susan Fiske, the Princeton University psychology professor who edited the study for publication.

“I was concerned,” Fiske told The Atlantic, “until I queried the authors and they said their local institutional review board had approved it—and apparently on the grounds that Facebook apparently manipulates people’s News Feeds all the time.”

Fiske added that she didn’t want “the originality of the research” to be lost, but called the experiment “an open ethical question.”

This is not how one should find out whether a study was approved by an IRB. Moreover, news coming out since the story broke suggests that there was no IRB approval before publication.

Also, when Susan Fiske is described as the professor who “edited the study,” what is meant is that she is the member of the National Academy of Sciences who served as editor for the paper. What that means depends on the type of submission the manuscript was, because PNAS is a different sort of journal. I’ve discussed this in previous posts regarding Linus Pauling’s vitamin C quackery, about which he published papers in PNAS back in the 1970s. Back then, members of the Academy could contribute papers to PNAS as they saw fit and in essence hand-pick their reviewers. Indeed, until recently, the only way that non-members could have papers published in PNAS was if a member of the Academy agreed to submit their manuscript for them, then known as “communicating” it (apparently these days known as “editing” it), and members were supposed to take responsibility for having such papers reviewed before “communicating” them to PNAS. Thus, in essence, a member of the Academy could get nearly anything he or she wished published in PNAS, whether written by himself or herself or by a friend. Normally, this ability wasn’t much of a problem for quality, because getting into the NAS was (and still is) so incredibly difficult that only the most prestigious scientists are invited to join. Consequently, PNAS is still a prestigious journal with a high impact factor, and most of its papers are of high quality. Scientists know, however, that Academy members sometimes used it as a “dumping ground” for leftover findings. They also know that on the rare occasions when members fell for dubious science, as Pauling did, they could “communicate” their questionable findings and get them published in PNAS, unless the findings were so outrageously ridiculous that even the deferential editorial board couldn’t stomach publishing them.

These days, submission requirements for PNAS are more rigorous. The standard mode is now called Direct Submission, which is still unlike submission to any other journal in that authors “must recommend three appropriate Editorial Board members, three NAS members who are expert in the paper’s scientific area, and five qualified reviewers.” Not many authors are likely to be able to manage this. I doubt I could, and I know an NAS member who’s even a cancer researcher; I’m just not sure I would want to impose on him to handle one of my manuscripts. Apparently, what used to be “contributed by” is now referred to as submission through a “prearranged editor” (PE). A prearranged editor must be a member of the NAS:

Prior to submission to PNAS, an author may ask an NAS member to oversee the review process of a Direct Submission. PEs should be used only when an article falls into an area without broad representation in the Academy, or for research that may be considered counter to a prevailing view or too far ahead of its time to receive a fair hearing, and in which the member is expert. If the NAS member agrees, the author should coordinate submission to ensure that the member is available, and should alert the member that he or she will be contacted by the PNAS Office within 3 days of submission to confirm his or her willingness to serve as a PE and to comment on the importance of the work.

According to Dr. Fiske, there are only “a half dozen or so social psychologists” in the NAS out of over 2,000 members. Assuming her estimate is accurate, my first guess was that this manuscript was submitted with Dr. Fiske as a prearranged editor because the relevant specialty lacks broad representation in the NAS. Why that is remains a question for another day, but apparently it is the case. Oddly enough, however, my first guess was wrong. This paper was a Direct Submission, as stated at the bottom of the article itself. Be that as it may, I remain quite shocked that PNAS did not, as virtually all journals that publish human subjects research do, make the authors state explicitly that they had IRB approval for their research. Some journals even require proof of IRB approval before they will publish. In fact, PNAS clearly failed to enforce its own requirements, which state that:

- Authors must include in the Methods section a brief statement identifying the institutional and/or licensing committee approving the experiments, and

- All experiments must have been conducted according to the principles expressed in the Declaration of Helsinki

The authors did neither.

While it is true that Facebook itself is not bound by the federal Common Rule requiring IRB approval for human subjects research, because it is a private company that does not receive federal grant funding and was not, as pharmaceutical and device companies are, performing the research in support of an application for FDA approval, the other two coauthors were faculty at universities that do receive a lot of federal funding and are therefore bound by the Common Rule. So it’s a glaring omission that there is no statement from Jeffrey T. Hancock that Cornell’s IRB approved the study, nor any statement from Jamie Guillory that UCSF’s IRB did. If there’s one thing I’ve learned in human subjects ethics training at every university where I’ve had to take it, it’s that there must be IRB approval from every university with faculty involved in a study.

IRB approval or no IRB approval, the federal Common Rule lists several required elements of informed consent. Some key requirements include:

- A statement that the study involves research

- An explanation of the purposes of the research

- The expected duration of the subject’s participation

- A description of the procedures to be followed

- Identification of any procedures which are experimental

- A description of any reasonably foreseeable risks or discomforts to the subject

- A description of any benefits to the subject or to others which may reasonably be expected from the research

- A statement describing the extent, if any, to which confidentiality of records identifying the subject will be maintained

- A statement that participation is voluntary, refusal to participate will involve no penalty or loss of benefits to which the subject is otherwise entitled, and the subject may discontinue participation at any time without penalty or loss of benefits to which the subject is otherwise entitled

The Facebook Terms of Use and Data Use Policy contain none of these elements. None. Zero. Nada. Zip. They do not constitute proper informed consent. Period. The Cornell and/or UCSF IRB clearly screwed up here, specifically by concluding that the researchers had proper informed consent. The concept that clicking on an “Accept” button for Facebook’s Data Use Policy constitutes anything resembling informed consent for a psychology experiment is risible. Yes, risible. Here’s the relevant part of the Facebook Data Use Policy. The list includes the uses of your personal data that you might expect, such as measuring the effectiveness of ads and delivering relevant ads to you, making friend suggestions, and protecting Facebook’s or others’ rights and property. However, at the very end of the list is this little bit, where Facebook asserts its right to use your personal data “for internal operations, including troubleshooting, data analysis, testing, research and service improvement.”

Now, I realize that in the social sciences, depending on the intervention being tested, “informed consent” standards might be less rigorous, and there are examples where IRBs are thought to have overreached in asserting their hegemony over the social sciences, a concern that dates back several years. Even so, what Facebook has is not “informed consent.” As has been pointed out, this is not how even social scientists define informed consent, and it’s certainly nowhere near how medical researchers define it. Rather, as Will over at Skepchick correctly points out, the Facebook Data Use Policy is more like a general consent than informed consent, similar to the sort of consent form a patient signs before being admitted to the hospital:

Or, if we want to look at biomedical research, which is the kind of research that inspired the Belmont Report, Facebook’s policy is analogous to going to a hospital, signing a form that says any data collected about your stay could be used to help improve hospital services, and then unknowingly participating in a research project where psychiatrists are intentionally pissing off everyone around you to see if you also get pissed off, and then publishing their findings in a scientific journal rather than using it to improve services. Do you feel that you were informed about being experimented on by signing that form?

That’s exactly why “consents to treat” or “consents for admission to the hospital” are not consents for biomedical research. There are minor exceptions. For instance, some consents for surgery include consent to use any tissue removed for research.

In fairness, it must be acknowledged that there are criteria under which an IRB can waive certain elements of informed consent. The relevant standard comes from 45 CFR §46.116(d), which requires that:

- D1: The research involves no more than minimal risk to the subjects;

- D2: The waiver or alteration will not adversely affect the rights and welfare of the subjects;

- D3: The research could not practicably be carried out without the waiver or alteration; and

- D4: Whenever appropriate, the subjects will be provided with additional pertinent information after participation.

Here’s where we get into gray areas. Remember, all of these conditions have to apply before informed consent can be waived, and clearly not all of them do. D1, for instance, is likely true of this research, although from my perspective D2 is arguable at best, particularly if you believe that users of a commercial company’s service should have the right to know what is being done with their information. D3 is arguable either way. For example, it’s not hard to imagine sending out a consent request to all Facebook users, and, given that Facebook has over a billion users, it’s not unlikely that hundreds of thousands would say yes. In contrast, D4 appears not to have been honored. There’s no reason Facebook couldn’t have told the users whose News Feeds were manipulated what had been done once the study was over. Even if one could agree that conditions D1 through D3 were met, the IRB should have insisted on D4, because there’s no reason to suspect that informing users after the study would have been inappropriate in the sense of altering the outcome of the experiment. No matter how you slice it, there was a serious problem with the informed consent for this study on multiple levels.
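Because the waiver criteria form a conjunction, a single unmet or merely arguable criterion is enough to sink the waiver. The short Python sketch below encodes that logic, using the assessment in the paragraph above as hypothetical input. Treating “arguable” as “not met” is an assumption of this sketch, and a real IRB determination is a documented judgment, not a boolean.

```python
from enum import Enum


class Finding(Enum):
    """Possible assessments of a single waiver criterion."""
    MET = "met"
    NOT_MET = "not met"
    ARGUABLE = "arguable"


REQUIRED = ("D1", "D2", "D3", "D4")


def waiver_permissible(findings: dict[str, Finding]) -> bool:
    """Return True only if every criterion D1-D4 is affirmatively met.

    Missing or ARGUABLE criteria count as not met (an assumption of this sketch).
    """
    return all(findings.get(c) is Finding.MET for c in REQUIRED)


# Hypothetical encoding of the assessment in the paragraph above.
assessment = {
    "D1": Finding.MET,       # likely no more than minimal risk
    "D2": Finding.ARGUABLE,  # effect on rights and welfare: arguable at best
    "D3": Finding.ARGUABLE,  # practicability: arguable either way
    "D4": Finding.NOT_MET,   # users were not told afterward
}
print(waiver_permissible(assessment))  # False
```

Flipping any single criterion to `Finding.MET` still leaves the result `False`; only all four together permit a waiver.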

Even Susan Fiske admits that she was “creeped out” by the study. To me, that’s a pretty good indication that something’s not ethically right; yet she edited it and facilitated its publication in PNAS anyway. She also doesn’t understand the Common Rule:

“A lot of the regulation of research ethics hinges on government supported research, and of course Facebook’s research is not government supported, so they’re not obligated by any laws or regulations to abide by the standards,” she said. “But I have to say that many universities and research institutions and even for-profit companies use the Common Rule as a guideline anyway. It’s voluntary. You could imagine if you were a drug company, you’d want to be able to say you’d done the research ethically because the backlash would be just huge otherwise.”

No, no, no, no! Drug companies follow the Common Rule because the FDA requires them to. Data from research not done according to the Common Rule can’t be used to support an application to the FDA to approve a drug. It’s also not voluntary for faculty at universities that receive federal research grant funding if those universities have signed on to the Federalwide Assurance (FWA) for the Protection of Human Subjects (as Cornell has done and UCSF appears to have done), as I pointed out when I criticized Dr. Mehmet Oz’s made-for-TV green coffee bean extract study. Nor should it be, and I am unaware of any major university that has refused to “check the box” in the FWA promising that all its human subjects research will be subject to the Common Rule, which makes me wonder how truly “voluntary” it is to agree to be bound by the Common Rule. Moreover, as I was finishing this post, I learned that the study actually did receive some federal funding through the Army Research Office, as described in the Cornell University press release. [NOTE ADDENDUM ADDED 6/30/2014.] IRB approval was definitely required. I note that I couldn’t find any mention of such funding in the manuscript itself.

The study itself

The basic finding of the study, namely that people alter their emotions and moods based upon the presence or absence of other people’s positive (and negative) moods, as expressed in Facebook status updates, is nothing revolutionary. It’s about as “Well, duh!” a finding as I can imagine. The researchers themselves referred to this effect as “emotional contagion,” because they concluded that the words of Facebook friends that users saw in their News Feeds directly affected the users’ moods. But is the effect significant, and does the research support the conclusion? The researchers’ methods were described thusly in the study:

The experiment manipulated the extent to which people (N = 689,003) were exposed to emotional expressions in their News Feed. This tested whether exposure to emotions led people to change their own posting behaviors, in particular whether exposure to emotional content led people to post content that was consistent with the exposure—thereby testing whether exposure to verbal affective expressions leads to similar verbal expressions, a form of emotional contagion. People who viewed Facebook in English were qualified for selection into the experiment. Two parallel experiments were conducted for positive and negative emotion: One in which exposure to friends’ positive emotional content in their News Feed was reduced, and one in which exposure to negative emotional content in their News Feed was reduced. In these conditions, when a person loaded their News Feed, posts that contained emotional content of the relevant emotional valence, each emotional post had between a 10% and 90% chance (based on their User ID) of being omitted from their News Feed for that specific viewing. It is important to note that this content was always available by viewing a friend’s content directly by going to that friend’s “wall” or “timeline,” rather than via the News Feed. Further, the omitted content may have appeared on prior or subsequent views of the News Feed. Finally, the experiment did not affect any direct messages sent from one user to another.

And:

For each experiment, two dependent variables were examined pertaining to emotionality expressed in people’s own status updates: the percentage of all words produced by a given person that was either positive or negative during the experimental period (as in ref. 7). In total, over 3 million posts were analyzed, containing over 122 million words, 4 million of which were positive (3.6%) and 1.8 million negative (1.6%).
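Taken together, the quoted methods describe a fairly simple pipeline: a post counts as emotional if it contains at least one word from the relevant list, posts of the targeted valence are omitted from a given News Feed load with a per-user probability between 10% and 90%, and the outcome measure is the percentage of a person’s own words that are positive or negative. The sketch below illustrates that logic in plain Python rather than on Hadoop. The tiny word lists, the SHA-256 mapping from user ID to omission probability, and the sample posts are all hypothetical; the real LIWC2007 dictionaries are far larger, and the paper does not say how the User ID determined the probability. The random-omission control condition is not modeled.

```python
import hashlib
import random
import re

# HYPOTHETICAL stand-in word lists; the real LIWC2007 dictionaries are far larger.
POSITIVE_WORDS = {"happy", "great", "love", "nice"}
NEGATIVE_WORDS = {"sad", "angry", "hate", "awful"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def has_valence(text: str, lexicon: set[str]) -> bool:
    """A post counts as emotional if it contains at least one lexicon word."""
    return any(word in lexicon for word in tokenize(text))


def omission_probability(user_id: int) -> float:
    """Map a user ID deterministically to a probability from 0.10 to 0.90.

    HYPOTHETICAL: the paper says only that the probability was based on the
    User ID; this SHA-256 mapping is an illustrative assumption.
    """
    digest = hashlib.sha256(str(user_id).encode()).digest()
    fraction = int.from_bytes(digest[:8], "big") / 2**64
    return 0.10 + 0.80 * fraction


def load_feed(user_id: int, posts: list[str], lexicon: set[str],
              rng: random.Random) -> list[str]:
    """Return the posts shown on one News Feed load.

    Posts of the targeted valence are dropped independently with the user's
    omission probability; every other post is always shown.
    """
    p = omission_probability(user_id)
    return [post for post in posts
            if not (has_valence(post, lexicon) and rng.random() < p)]


def emotion_percentages(own_updates: list[str]) -> tuple[float, float]:
    """Dependent variables: percent of all words a person wrote that are
    positive, and percent that are negative."""
    words = [w for text in own_updates for w in tokenize(text)]
    if not words:
        return 0.0, 0.0
    pos = sum(w in POSITIVE_WORDS for w in words)
    neg = sum(w in NEGATIVE_WORDS for w in words)
    return 100 * pos / len(words), 100 * neg / len(words)


# Usage: a "reduced positivity" feed load for one hypothetical user.
friends_posts = ["What a great day!", "Meeting moved to 3pm", "I hate traffic"]
print(load_feed(12345, friends_posts, POSITIVE_WORDS, random.Random(42)))
print(emotion_percentages(["I love this great day", "I am sad"]))  # (25.0, 12.5)
```

Note how blunt the outcome measure is: two positive words and one negative word out of eight yields 25% and 12.5%, with no regard for what the sentences actually say.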

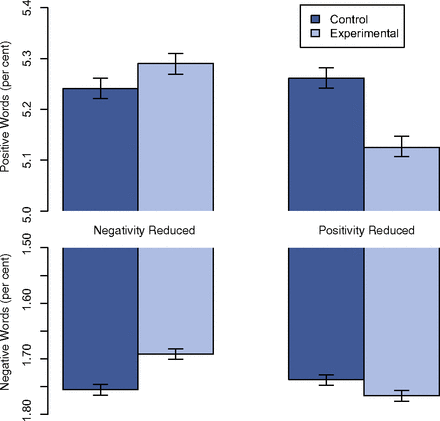

The results were summed up in a single deceptive chart. Why do I call the chart deceptive? Easy, because charts were done in such a way as to make the effect look much larger by starting the y-axis at 5.0 in the graph that shows a difference between around 5.3 and 5.25 and starting it at 1.5 for a graph that showed a difference between 1.75 and maybe 1.73. See what I mean by looking at Figure 1:

This is another thing the authors did that I can’t believe Dr. Fiske and PNAS let them get away with, as messing with where the y-axis of a graph starts in order to make a tiny effect look bigger is one of the most obvious tricks there is. In this case, given how tiny the effect is, even if it was a statistically significant effect, it’s highly unlikely to be what we call a clinically significant effect.
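The arithmetic behind that complaint is easy to check. The sketch below compares how much taller one bar looks than another when the y-axis starts above zero with the true ratio of the two values. The inputs are the approximate values read off Figure 1 in the text above, not the paper’s underlying data.

```python
def apparent_vs_actual(a: float, b: float, axis_start: float) -> tuple[float, float]:
    """Return (apparent ratio, actual ratio) of two bar heights.

    The apparent ratio is what the eye sees when the y-axis starts at
    axis_start; the actual ratio is a / b, as seen with the axis at zero.
    """
    apparent = (a - axis_start) / (b - axis_start)
    actual = a / b
    return apparent, actual


# Approximate values read off Figure 1, as given in the text (not the raw data).
panels = [(5.30, 5.25, 5.0), (1.75, 1.73, 1.5)]
for a, b, start in panels:
    apparent, actual = apparent_vs_actual(a, b, start)
    print(f"{a} vs {b}: looks {apparent:.2f}x as tall, actually {actual:.3f}x")
```

With those readings, the bars in the first panel look about 20% different in height even though the underlying values differ by only about 1%; in the second panel, a roughly 1% difference is drawn as roughly 9%.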

But are these valid measures? John M. Grohol of PsychCentral was unimpressed. He pointed out that the tool the researchers used to analyze the text was not designed for short snippets of text, asking snarkily, “Why would researchers use a tool not designed for short snippets of text to, well… analyze short snippets of text?” He concluded that it was because the tool chosen, the LIWC, is one of the few tools that can process large amounts of text rapidly. He then went on to describe why it’s a poor tool to apply to a Tweet or a brief Facebook status update:

Length matters because the tool actually isn’t very good at analyzing text in the manner that Twitter and Facebook researchers have tasked it with. When you ask it to analyze positive or negative sentiment of a text, it simply counts negative and positive words within the text under study. For an article, essay or blog entry, this is fine — it’s going to give you a pretty accurate overall summary analysis of the article since most articles are more than 400 or 500 words long.

For a tweet or status update, however, this is a horrible analysis tool to use. That’s because it wasn’t designed to differentiate — and in fact, can’t differentiate — a negation word in a sentence.

Let’s look at two hypothetical examples of why this is important. Here are two sample tweets (or status updates) that are not uncommon:

“I am not happy.”

“I am not having a great day.”

An independent rater or judge would rate these two tweets as negative — they’re clearly expressing a negative emotion. That would be +2 on the negative scale, and 0 on the positive scale.

But the LIWC 2007 tool doesn’t see it that way. Instead, it would rate these two tweets as scoring +2 for positive (because of the words “great” and “happy”) and +2 for negative (because of the word “not” in both texts).

That’s a huge difference if you’re interested in unbiased and accurate data collection and analysis.

Indeed it is. So not only was this research of questionable ethics, it wasn’t even particularly good research. I tend to agree with Dr. Grohol that these results most likely represent nothing more than “statistical blips.” The authors even admit that the effects are tiny. Yet none of that stops them from concluding that their “results indicate that emotions expressed by others on Facebook influence our own emotions, constituting experimental evidence for massive-scale contagion via social networks.” Never mind that they never measured a single person’s emotions or mood states.
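Grohol’s two example tweets make the negation problem concrete. The Python sketch below contrasts a naive word counter with a crude negation-aware variant. The lexicon is hypothetical and follows Grohol’s description of LIWC 2007 (treating “not” as a negative word); it is not the actual LIWC dictionary. The three-word negation window is likewise an illustrative choice, not something LIWC or the study’s authors used.

```python
import re

# HYPOTHETICAL lexicon following Grohol's description; not the real LIWC 2007 dictionary.
POSITIVE = {"happy", "great"}
NEGATIVE = {"not"}
NEGATORS = {"not", "never", "no"}


def words_of(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def naive_counts(text: str) -> tuple[int, int]:
    """Count lexicon hits with no regard for context, as Grohol describes."""
    words = words_of(text)
    return sum(w in POSITIVE for w in words), sum(w in NEGATIVE for w in words)


def negation_aware_counts(text: str, window: int = 3) -> tuple[int, int]:
    """Count a positive word as negative when a negator appears among the
    preceding `window` words. Negators are treated as modifiers, not as
    emotion words; any other negative word is counted as-is."""
    words = words_of(text)
    pos = neg = 0
    for i, w in enumerate(words):
        if w in POSITIVE:
            if any(prev in NEGATORS for prev in words[max(0, i - window):i]):
                neg += 1
            else:
                pos += 1
        elif w in NEGATIVE and w not in NEGATORS:
            neg += 1
    return pos, neg


tweets = ["I am not happy.", "I am not having a great day."]
for counter in (naive_counts, negation_aware_counts):
    totals = [sum(c) for c in zip(*(counter(t) for t in tweets))]
    print(counter.__name__, "positive/negative:", totals)
```

The naive counter reproduces Grohol’s +2 positive and +2 negative, while the heuristic yields 0 positive and 2 negative, matching a human rater. It is still crude: it only flips positive words, so a phrase like “not bad” would still count as negative if “bad” were in the negative list.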

“Research” to sell you stuff

Even after the firestorm that erupted this weekend, Facebook unfortunately still doesn’t seem to “get it,” as is evident from the statement it issued yesterday:

“This research was conducted for a single week in 2012 and none of the data used was associated with a specific person’s Facebook account,” says a Facebook spokesperson. “We do research to improve our services and to make the content people see on Facebook as relevant and engaging as possible. A big part of this is understanding how people respond to different types of content, whether it’s positive or negative in tone, news from friends, or information from pages they follow. We carefully consider what research we do and have a strong internal review process. There is no unnecessary collection of people’s data in connection with these research initiatives and all data is stored securely.”

This is about as tone-deaf and clueless a response as I could have expected. Clearly, it was written not by a researcher but by a corporate drone. Even so, he or she might have had a point if the study were strictly observational. But it wasn’t. It was an experimental study; i.e., an interventional study. An intervention was applied to one group and compared with a control group, and the effects were measured. That the investigators used a poor tool to measure those effects doesn’t change the fact that this was an experimental study, and, quite rightly, the bar for consent and ethical approval is higher for experimental studies. Facebook failed to clear that bar and still doesn’t get it. As Kashmir Hill put it, while many users may already expect and be willing to have their behavior studied, they don’t expect Facebook to actively manipulate their environment in order to see how they react. On the other hand, Facebook has clearly been doing just that for years. Remember, its primary goal is to get you to pay attention to the ads it sells, so that it can make money.

Finally, there is one more “out” that Facebook might claim by lumping its “research” and “service improvement” together. In medicine, quality improvement initiatives are not considered “research” per se and do not require IRB approval. I’m referring to initiatives, for instance, to measure surgical site infections and look for situations or markers that predict them, the purpose being to reduce the rate of such infections by intervening to correct the issues discovered. I myself am heavily involved in just such a collaborative, which examines various factors, most critically adherence to evidence-based guidelines, as indicators of quality.

Facebook might try to claim that its “research” was in reality for “service improvement,” but if that’s the case, it would be just as disturbing. Think about it. What “service” is Facebook “improving” through this research? The answer is obvious: its ability to manipulate the emotions of its users in order to better sell them stuff. Don’t believe me? Here’s what Sheryl Sandberg, Facebook’s chief operating officer, said recently:

Our goal is that every time you open News Feed, every time you look at Facebook, you see something, whether it’s from consumers or whether it’s from marketers, that really delights you, that you are genuinely happy to see.

As if that weren’t enough, here it is straight from the horse’s mouth, that of Adam Kramer, the corresponding author:

Q: Why did you join Facebook?

A: Facebook data constitutes the largest field study in the history of the world. Being able to ask–and answer–questions about the world in general is very, very exciting to me. At Facebook, my research is also immediately useful: When I discover something, we can use this to make improvements to the product. In an academic position, I would have to have a paper accepted, wait for publication, and then hope someone with the means to usefully implement my work takes notice. At Facebook, I just message someone on the right team and my research has an impact within weeks if not days.

I don’t think it can be said much clearer than that.

As tempting a resource as Facebook’s huge amounts of data might be to social scientists interested in studying online social networks, social scientists need to remember that Facebook’s primary goal is to sell advertising, and therefore any collaboration they strike up with Facebook information scientists will be designed to help Facebook accomplish that goal. That might make it legal for Facebook to dodge human subjects protection guidelines, but it certainly doesn’t make it ethical. That’s why social scientists must take extra care to make sure any research using Facebook data is more than above board in terms of ethical approval and oversight, because Facebook has no incentive to do so and doesn’t even seem to understand why its research failed from an ethical standpoint. Jamie Guillory, Jeffrey Hancock, and Susan Fiske failed to realize this and have reaped the whirlwind.

ADDENDUM: Whoa. Now Cornell University is claiming that the study received no external funding and has tacked an addendum onto its original press release about the study.

Also, as reported here and elsewhere, Susan Fiske is now saying that the investigators had Cornell IRB approval for using a “preexisting data set”:

I just heard back from the Facebook study editor on the question of whether researchers used an IRB. pic.twitter.com/kCoEp1LNJ8

— Adrienne LaFrance (@AdrienneLaF) June 30, 2014

Also, yesterday after I had finished writing this post, Adam D. I. Kramer published a statement, which basically confirms that Facebook was trying to manipulate emotions and in which he apologized, although it sure sounds like a “notpology” to me.

Finally, we really do appear to be dealing with a culture clash between tech and people who do human subjects research, as described well here:

Academic researchers are brought up in an academic culture with certain practices and values. Early on they learn about the ugliness of unchecked human experimentation. They are socialized into caring deeply for the well-being of their research participants. They learn that a “scientific experiment” must involve an IRB review and informed consent. So when the Facebook study was published by academic researchers in an academic journal (the PNAS) and named an “experiment”, for academic researchers, the study falls in the “scientific experiment” bucket, and is therefore to be evaluated by the ethical standards they learned in academia.

Not so for everyday Internet users and Internet company employees without an academic research background. To them, the bucket of situations the Facebook study falls into is “online social networks”, specifically “targeted advertising” and/or “interface A/B testing”. These practices come with their own expectations and norms in their respective communities of practice and the public at large, which are different from those of the “scientific experiment” frame in academic communities. Presumably, because they are so young, they also come with much less clearly defined and institutionalized norms. Tweaking the algorithm of what your news feed shows is an accepted standard operating procedure in targeted advertising and A/B testing.

This, I think, accounts for the conflict between the techies, who shrug their shoulders and say, NBD, and medical researchers like me who are appalled by this experiment.