As I contemplated what I’d like to write about for the first post of 2012, I happened to come across a post by former regular and now occasional SBM contributor Peter Lipson entitled Another crack at medical cranks. In it, Dr. Lipson discusses one characteristic that allows medical cranks and quacks to attract patients, namely the ability to make patients feel wanted, cared for, and, often, happy. As I (and several of us at SBM) have said before, it’s not necessary to invoke magic, quackery, or pseudoscience in order to show empathy to patients and provide them with the “human touch” that forges a strong therapeutic relationship between physician and patient and maximizes placebo effects without deception. In the old days, this used to be called “bedside manner,” but in these days of capitation and crappy third-party payor reimbursement it’s very difficult for physicians to take the time necessary to listen to patients and thereby build the bonds of trust and mutual respect that can augment the treatments that are prescribed. Unfortunately, because of this the quacks have been all too eager to leap into the breach.

One aspect of this tendency of medical cranks is to claim that they somehow “individualize” their treatment to the patient, as Peter points out:

There are a number of so-called holistic doctors in town who claim to practice “individualized” medicine. What this really means isn’t clear. My colleagues and I certainly individualize the treatment plans for all of our patients, using data gleaned from decades of scientific studies of large groups of patients. What “individualized” care seems to mean in this other context is “stuff I made up to make that patient feel more unique and special.”

I put it slightly differently when I wrote about the very same phenomenon a couple of years ago. Having come across a “success” story told by an “alternative practitioner” in which a patient was shuttled from treatment to treatment to treatment over a long period of time before finally getting better on her own (an improvement that, predictably, the last practitioner, a homeopath, took credit for), I described the problem with “individualized” treatments in “alternative” medicine, “complementary and alternative medicine” (CAM), “integrative medicine” (IM), or whatever you want to call it:

Here’s the problem with “individualized” treatments. Taken to an extreme, as many alternative medicine practitioners do, “individualization” becomes in essence an excuse to do whatever the heck the practitioner feels like and not to have to list diagnostic criteria or show actual efficacy of their treatments in a way that others can replicate. Look at Dr. Elliott’s statement: “Each patient was so unique that what cured one person might have no effect on the next.” Certainly, organisms such as humans can and do show considerable variability in their biology and response to treatment, but rarely so much that what “cures” one person will have no effect on the next. Such extreme emphasis of “individualization” is virtually custom-designed to lead to exactly the sort of marathon trial-and-error treatment histories he described.

A corollary to this claim of extreme “individualization” by CAM practitioners such as homeopaths is the frequent assertion by promoters of non-science-based medical treatments that science- and evidence-based medicine (SBM/EBM) can’t study their woo because its “gold standard” for determining whether a treatment “works” is the randomized controlled clinical trial (RCT) and RCTs can’t study therapies that are so “individualized.” This assertion is, of course, a Tokyo-sized straw man being gleefully destroyed by Godzilla-sized woo, in that SBM/EBM is not just about RCTs (particularly SBM). It’s also just plain wrong, in that it is indeed quite possible to come up with designs that take patient individualization into account. True, it’s more difficult, but it’s by no means impossible. Nonetheless, the cry that “RCTs can’t study my woo because it’s ‘individualized’” continues to go up in the alt-med blogosphere, most recently when a homeopath named Judith Acosta posted just such an argument at (where else?) that wretched hive of scum and quackery, The Huffington Post, in the form of a two-part post entitled A personal case for classical homeopathy:

The problem is that homeopathy is aimed at treating the individual with a single remedy, chosen specifically for him or her. It is not for treating masses of people with the same pill. Twenty people could have the “same” flu, but each one would need a different remedy (not necessarily Oscillococcinum) and be rightly cured because each one would manifest illness in a way that is utterly unique to him-/herself. We always treat the person, not the disease. As such it is exceedingly difficult, if not impossible to replicate homeopathic treatment the way pharmaceutical companies try to do in drug trials.

Yes, Acosta is actually making the argument that individuals are so completely unique that no two of them will require the same treatment for the same disease, just like the example I used two years ago from Dr. Travis Elliot, who could actually proclaim with an apparently straight face that “each patient was so unique that what cured one person might have no effect on the next.” Of course, the silly part of this extreme “individualization” of treatment is that there are really no commonly agreed-upon standards based in objective evidence to guide practitioners in “individualizing” therapy. It’s not for nothing that I refer to this sort of “personalized medicine” as “making it up as you go along,” a spectrum that ranges from the sort of claims a homeopath like Acosta makes to the way Dr. Stanislaw Burzynski co-opts the language of genomics in order to justify his own version of “make it up as you go along”-style “personalized medicine.”

Faux “individualization” versus science

Part and parcel of this faux “individualization” advocated by various CAM promoters is an intense need to attack EBM/SBM as the enemy of such “individualization.” At least, that is the rationale. In reality, practitioners of pseudoscientific medical systems and treatments recognize, either implicitly or explicitly, that whenever their woo is tested through the scientific method and RCTs, it fails. A real scientist or practitioner of SBM, when faced with such a result, would abandon the therapy that fails scientific validation. These pseudoscientific treatments, however, are more about belief than science, and when belief collides with science the believer must somehow find a reason to discount or reject science. That is the reason for the extreme hostility to EBM/SBM among physicians and scientists who subscribe to pseudoscientific or mystical belief systems like homeopathy, energy healing, acupuncture, traditional Chinese medicine, Ayurveda, and other prescientific medical belief systems that cling to medicine like kudzu and whose roots slowly destroy the scientific basis of medicine. I’ve seen this many times before and have addressed it at least a couple of times on this blog, for instance, when Dr. Andrew Weil launched a rather furious broadside at EBM just last year in which he used the common straw man that EBM is only about RCTs.

I saw a more sophisticated version of the sorts of attacks on SBM/EBM made by apologists for quackery just before the holidays, in the form of two articles. One appeared on Gaia Health and was entitled Evidence-based medicine is a fraud. Here’s why. It was based on an article voicing similar sentiments that appeared on Orthomolecular.org, a site promoting orthomolecular medicine, which is a form of megavitamin supplementation quackery embraced by Linus Pauling in his later years, when he became enamored of the concept that he could cure cancer and the common cold with enormous doses of vitamin C. The site advertises its love of “individualization” and “personalization” in its slogan, “Therapeutic nutrition based upon biochemical individuality.” This slogan amuses me to no end, given that the motto of orthomolecular medicine seems to be, “If some vitamins are good, more must be better. A lot more.” In any case, the other article is by Steve Hickey, PhD, and Hilary Roberts, PhD, and is entitled Evidence-Based Medicine: Neither Good Evidence nor Good Medicine. Combined, these articles invoke a collection of straw man arguments, obvious and simple criticisms of EBM that do not come close to invalidating its usefulness, and a hilariously inapt analogy, all in a lecturing tone, complete with “lessons” in statistics. In particular, these articles implicitly and explicitly argue for the inclusion of “all data,” including lousy data, the purpose of which, obviously, is to lower the bar for evidence for the pseudoscience and pseudomedicine they want to promote.

I’ll show you what I mean.

Let’s start with the Gaia Health article, because it’s much easier to dispose of: (1) it’s not original, parroting, as it does, the arguments of the Orthomolecular.org article, and (2) it dumbs down even its already transparent source material. In fact, it can best be summarized by its conclusion:

At best, Evidence Based Medicine is pseudo science or junk science. It’s a fraud designed to give the impression that statistics derived from studies can possibly tell us much of value about how to deal with or treat an individual human.

And:

The reality, though, is that EBM is fraudulent. It gives the impression of proof for efficacy of medical treatment, but is largely a smokescreen designed to sell medical products.

This latter charge, of course, smoked my irony meter, melting it into a quivering, bubbling blob of liquid plastic and copper. The reason is that attacking EBM in this manner is largely a smokescreen designed (1) to give the appearance that pseudoscience is legitimate science by attacking legitimate science and (2) thereby to sell products and services, such as supplements and treatments like acupuncture. Predictably, the first attack that Gaia Health adds to the ones it repeats from Hickey and Roberts is, in essence, the “pharma shill gambit”:

That, of course, is the bottom line. It’s why the term EBM is invoked: to give the impression that medical treatments are based on meaningful research. The purpose isn’t to produce research that benefits patients. The purpose is to produce research that benefits the pockets of Big Pharma and Big Medicine.

No one who defends EBM/SBM claims that it is perfect or denies that there are problems with it, including that money from pharmaceutical companies can be a malign influence that often doesn’t serve science, medicine, or patients. However, from the criticisms in these articles, it’s quite obvious that, even if all pharma money were removed from the drug approval process and profit were never a consideration, none of the authors of these articles would approve of EBM. The reason is simple. They are interested in promoting medical modalities that are not supported by science or evidence and want to find a way to make it seem as though they are. The way to do that is to attack EBM as currently configured, often (as in this case) invoking the “individualization” gambit. For instance, the Gaia Health article rails against “one size fits all” medicine, which is all well and good, except that the very essence of practicing EBM is applying what is known from clinical trials to individual patients, something Peter has emphasized time and time again. More on that later. In the meantime, let’s look at the article that inspired it all.

Hickey and Roberts make their disdain for EBM apparent right from the opening paragraphs:

Evidence-based medicine (EBM) is the practice of treating individual patients based on the outcomes of huge medical trials. It is, currently, the self-proclaimed gold standard for medical decision-making, and yet it is increasingly unpopular with clinicians. Their reservations reflect an intuitive understanding that something is wrong with its methodology. They are right to think this, for EBM breaks the laws of so many disciplines that it should not even be considered scientific. Indeed, from the viewpoint of a rational patient, the whole edifice is crumbling.

The assumption that EBM is good science is unsound from the start. Decision science and cybernetics (the science of communication and control) highlight the disturbing consequences. EBM fosters marginally effective treatments, based on population averages rather than individual need. Its mega-trials are incapable of finding the causes of disease, even for the most diligent medical researchers, yet they swallow up research funds. Worse, EBM cannot avoid exposing patients to health risks. It is time for medical practitioners to discard EBM’s tarnished gold standard, reclaim their clinical autonomy, and provide individualized treatments to patients.

No, no, no, no, no.

This is a massive straw man, a caricature of EBM. For example, no one (at least no one whom I know or whose work I read) claims that EBM “mega-trials” can find the cause of disease. That’s not what they are designed for; they’re designed to determine whether therapies are efficacious and safe. Determining the cause of disease depends on a combination of basic science, clinical observations, and clinical trials. In other words, it depends upon the totality of scientific evidence, of which clinical trials are just a part. It doesn’t take Hickey and Roberts long to complain about how badly they think Linus Pauling was treated for his advocacy of vitamin C quackery. I don’t know about you, but when I see complaints like this, I know I’m dealing not with a science-based critique of anything, but rather cranks complaining that they are not taken seriously. In fact, when I see them complain that EBM in practice means “relying on a few large-scale studies and statistical techniques to choose the treatment for each patient,” right before complaining about how Linus Pauling was criticized for using megadoses of vitamin C to treat cancer, I can’t help but think their real purpose is incredibly obvious.

Legitimate versus illegitimate complaints about EBM

So what complaints against EBM are encompassed in this article? Remember, several of us on this blog criticize EBM fairly frequently, particularly Kimball Atwood. You might think that, even in this article, we might find something to agree with. You’d be (mostly) wrong. Hickey and Roberts, amazingly, are highly talented at making what I like to call “Well, duh!” criticisms of EBM. For instance, they make a great show of pointing out that “statistically significant” doesn’t necessarily mean “clinically significant.” As I said, “Well, duh!” That’s a very basic principle that virtually every physician knows. In fact, when we discuss clinical trials in various venues, we argue all the time about whether a “statistically significant” result is clinically significant when the difference is small. We discuss these sorts of issues here on SBM frequently. Yet Hickey and Roberts seem to think they are the first people to have noticed that EBM can sometimes detect differences that are probably not clinically significant. In fact, it’s painfully obvious from this incredibly simplistic analogy, which they seem to consider highly profound, that neither Hickey nor Roberts is a physician or has ever taken care of a patient:

To explain this, suppose we measured the foot size of every person in New York and calculated the mean value (total foot size/number of people). Using this information, the government proposes to give everyone a pair of average-sized shoes. Clearly, this would be unwise-the shoes would be either too big or too small for most people. Individual responses to medical treatments vary by at least as much as their shoe sizes, yet despite this, EBM relies upon aggregated data. This is technically wrong; group statistics cannot predict an individual’s response to treatment.

Well, yes and no. Group statistics can’t predict precisely an individual’s response to treatment, but if a clinician combines group statistics, biomarkers, and considerations of the patient’s other clinical variables, it is possible to estimate whether a treatment is likely to work in a patient and what the odds are that it will work. Hickey and Roberts, for all their invocations of complexity later in their article, seem very prone to binary thinking. To them, either a treatment works or it doesn’t, and they think that EBM results can’t inform or predict whether a treatment will work. This is also nihilistic thinking, in which Hickey and Roberts fall for the “fallacy of the perfect solution.” To them, if EBM isn’t perfect, then it’s crap. If it’s not painfully obvious how to apply RCT results to individual patients, then RCTs are crap.
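To make the “yes and no” concrete: one standard EBM way of applying a trial result to an individual is to combine the trial’s relative risk reduction with that patient’s own estimated baseline risk. The sketch below assumes a hypothetical relative risk reduction of 25% and two hypothetical patients; none of the numbers come from a real trial, and it assumes the relative effect holds roughly constant across baseline risk.

```python
def absolute_benefit(baseline_risk, relative_risk_reduction):
    """Return (absolute risk reduction, number needed to treat).

    Assumes the relative risk reduction seen in a trial applies roughly
    uniformly across patients with different baseline risks.
    """
    arr = baseline_risk * relative_risk_reduction
    nnt = 1 / arr  # patients treated to prevent one bad outcome
    return arr, nnt


RRR = 0.25  # hypothetical relative risk reduction from an RCT

# Hypothetical patients whose other clinical variables put them at different risk.
high_risk_arr, high_risk_nnt = absolute_benefit(0.20, RRR)
low_risk_arr, low_risk_nnt = absolute_benefit(0.04, RRR)

print(f"high-risk patient: ARR {high_risk_arr:.2%}, NNT {high_risk_nnt:.0f}")
print(f"low-risk patient:  ARR {low_risk_arr:.2%}, NNT {low_risk_nnt:.0f}")
```

The same group-level result yields very different expectations for different people: with these made-up inputs, roughly one high-risk patient in 20 benefits versus roughly one low-risk patient in 100. That is individualization built on population data, not in spite of it.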

This same sort of simplistic thinking infuses the entire analogy above, which seems profound on a quick, superficial reading, but if you look at it more closely you’ll see that it’s a parody, a straw man, if you will, of how EBM is practiced. Applied to the analogy, the EBM approach would be to estimate how many people fall into different ranges of shoe sizes and then to buy a range of shoe sizes that encompasses as much of the population as possible, in the right proportions. Think of it as taking into account other clinical indicators, biomarkers, and the patients’ clinical characteristics. Is that solution perfect? No, of course not. Will there be people whose shoe sizes won’t be accommodated? Of course, but they will (usually) be in the minority.
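A toy version of the shoe analogy shows the difference between the parody (one average-sized pair for everyone) and stocking a range of sizes in proportion to how often they occur. The sizes and the 90% coverage target below are hypothetical, chosen only for illustration.

```python
from collections import Counter
from statistics import mean

# Hypothetical shoe sizes for a small population (illustrative data only).
sizes = [6, 7, 7, 8, 8, 8, 9, 9, 9, 9, 10, 10, 10, 11, 11, 12, 13, 15]


def fraction_fitted(population, stocked):
    """Fraction of people whose size is among the stocked sizes."""
    return sum(1 for s in population if s in stocked) / len(population)


# The parody: everyone gets one pair in the (rounded) average size.
average_size = round(mean(sizes))
one_size_fit = fraction_fitted(sizes, {average_size})

# The EBM-like approach: stock the most common sizes first until a
# hypothetical 90% coverage target is met.
counts = Counter(sizes)
stocked = set()
for size, _ in counts.most_common():
    if fraction_fitted(sizes, stocked) >= 0.9:
        break
    stocked.add(size)

# Order each stocked size in proportion to how often it occurs.
order_share = {s: counts[s] / len(sizes) for s in sorted(stocked)}
range_fit = fraction_fitted(sizes, stocked)
unfitted = sorted(s for s in sizes if s not in stocked)

print(f"one average size fits {one_size_fit:.0%} of people")
print(f"a proportional range fits {range_fit:.0%}; unfitted sizes: {unfitted}")
```

With these made-up numbers, a single average size fits only about one person in six, while the proportional range fits everyone except the one person with an unusual size, which is exactly the “minority” left over in a real-world, imperfect solution.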

The next thing that Hickey and Roberts don’t like about EBM is that it “selects” evidence. They complain about how meta-analyses leave out studies that don’t meet strict criteria for study quality and how EBM relies on “best evidence,” as though that were a bad thing. They present an example in which data points are removed from a graph so that the remaining points fit a curve, lecturing us that “one of the first lessons for science students is to not select the best evidence; all data must be considered.” Again, this is a misleading comparison. It is true that we don’t want to “cherry pick” evidence, which is what Hickey and Roberts are referring to, but on the other hand evidence that is less reliable should be deemphasized or thrown out, and that’s all that meta-analyses and the EBM emphasis on “best evidence” do. One can argue about what is defined as “best evidence,” and, in fact, several of us have criticized EBM’s reliance on frequentist statistics like those that cause Hickey and Roberts so much agita. Indeed, many are the times that we’ve complained that EBM’s “best evidence” overemphasizes RCTs and downplays basic science considerations and prior plausibility. Somehow, though, I strongly suspect that taking these aspects into account isn’t what Hickey and Roberts are about. Rather, they seem, more than anything else, to be about including anecdotal and observational evidence, hence the emphasis on including “more” kinds of evidence.
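The difference between cherry-picking and deemphasizing less reliable evidence can be sketched with inverse-variance weighting, the textbook fixed-effect way of pooling studies in a meta-analysis. This is an illustrative technique chosen here, not a method described by Hickey and Roberts, and the three studies are hypothetical.

```python
import math

# Hypothetical studies: (effect estimate, standard error). Illustrative only.
# The third is a small, noisy study reporting a large effect.
studies = [(0.10, 0.05), (0.12, 0.04), (0.80, 0.60)]


def fixed_effect_pool(studies):
    """Inverse-variance (fixed-effect) pooled estimate, its SE, and weight shares."""
    weights = [1 / se ** 2 for _, se in studies]
    total = sum(weights)
    pooled = sum(w * est for w, (est, _) in zip(weights, studies)) / total
    return pooled, math.sqrt(1 / total), [w / total for w in weights]


pooled, pooled_se, shares = fixed_effect_pool(studies)
print(f"pooled effect {pooled:.3f} (SE {pooled_se:.3f})")
for (est, se), share in zip(studies, shares):
    print(f"  study effect {est:.2f}, SE {se:.2f}: {share:.1%} of the weight")
```

Nothing is thrown out here: the noisy study is included, but it carries well under 1% of the weight, so the pooled estimate stays close to what the two more precise studies show.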

Perhaps the most ridiculous argument Hickey and Roberts make is this one:

The problems with EBM continue. It breaks other fundamental laws, this time from the field of cybernetics, which is the study of systems control and communication. The human body is a biological system and, when something goes wrong, a medical practitioner attempts to control it. To take an example, if a person has a high temperature, the doctor could suggest a cold compress; this might work if the person was hot through over-exertion or too many clothes. Alternatively, the doctor may recommend an antipyretic, such as aspirin. However, if the patient has an infection and a raging fever, physical cooling or symptomatic treatment might not work, as it would not quell the infection.

In the above case, a doctor who overlooked the possibility of infection has not applied the appropriate information to treat the condition. This illustrates a cybernetic concept known as requisite variety, first proposed by an English psychiatrist, Dr. W. Ross Ashby. In modern language, Ashby’s law of requisite variety means that the solution to a problem (such as a medical diagnosis) has to contain the same amount of relevant information (variety) as the problem itself. Thus, the solution to a complex problem will require more information than the solution to a straightforward problem. Ashby’s idea was so powerful that it became known as the first law of cybernetics. Ashby used the word variety to refer to information or, as an EBM practitioner might say, evidence.

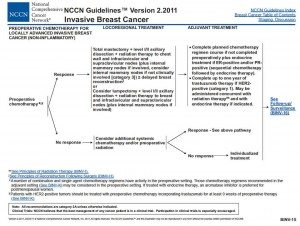

While this is an interesting speculation, Hickey and Roberts do not present any evidence to suggest that cybernetic principles of control and communication apply to biological systems in this way. In any case, whenever you see someone trying to apply a “fundamental law” of one field to another field, be very, very skeptical. Ashby’s law, as described above, is at best tangential to the problem of taking care of patients, in that physicians applying EBM already take into account more information to solve complex problems than they do to solve simple problems. The same is true of designing clinical trials to test treatments for complex versus simpler clinical problems. In fact, take a look at the “levels of evidence” paradigm of EBM. That’s hardly “simple.” Take a look at some actual EBM guidelines. My favorite example is the National Comprehensive Cancer Network (NCCN) guidelines published for nearly every cancer. I use the NCCN guidelines for breast cancer because I’m most familiar with them. The 2011 guidelines take up 148 pages, packed with text, graphs, decision trees, and discussions of areas of uncertainty.

I intentionally picked one of the simpler sets of guidelines for breast cancer.

Hickey and Roberts make it sound as though applying EBM is as simple as looking at a clinical trial or two, taking the results, and applying them to a patient. EBM might well have its deficiencies, but Hickey and Roberts, intentionally, I believe, make EBM seem so simplistic that they paint physicians as simpletons, when in reality applying EBM guidelines like the ones above is anything but simple. It requires clinical judgment and the ability to fit an individual patient into our knowledge base and determine what will likely be the best treatment, both of which require a deep understanding of the clinical evidence. Even if Ashby’s law applied to human disease, I would argue that EBM guidelines roughly follow it. Hickey and Roberts’ parody of EBM is intentionally designed so that it does not.

It doesn’t exactly help my confidence in Hickey and Roberts to see that they don’t quite seem to understand what the ecological fallacy is. They describe it as “wrongly using group statistics to make predictions about individuals.” This is not quite correct. I’ve written about the ecological fallacy before, and that’s not exactly what it means. The term is generally used in epidemiology, where it refers to drawing inferences about individuals from analyses done at the group level. In practice, this means taking large group averages for which individual-level data are not available and trying to find correlations among them. However, doing this can introduce bias and exaggerate apparent correlations compared to doing the same analysis using individual-level data. The reason the ecological fallacy can be a problem in making inferences is confounding: other factors that differ between groups might be the real explanation for any correlations observed. In other words, the problem with the ecological fallacy is that it fails to take confounding factors adequately into consideration, something that can be done more easily with individual-level data. RCTs, in contrast, are carefully designed and controlled and use individual-level data. While it’s not entirely wrong to be concerned about applying the results of RCTs to individual patients, to refer to doing so as the “ecological fallacy” (i.e., wrongly attributing group-level correlations to individuals) is a bit of a stretch. RCTs, after all, are not the sort of group-level comparisons that the ecological fallacy refers to.
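A toy example with made-up numbers shows how correlations computed from group averages can exaggerate, or even reverse, the relationship seen in individuals when a group-level factor confounds it. Here a hypothetical “region” plays the confounder; the data and names are invented for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


# Hypothetical individual-level data for three regions: (exposure, outcome).
# Within each region, more exposure goes with a *lower* outcome.
regions = {
    "A": ([1, 2, 3], [3, 2, 1]),
    "B": ([4, 5, 6], [6, 5, 4]),
    "C": ([7, 8, 9], [9, 8, 7]),
}

# Ecological analysis: only the regional averages are available.
mean_x = [sum(xs) / len(xs) for xs, _ in regions.values()]
mean_y = [sum(ys) / len(ys) for _, ys in regions.values()]
group_r = pearson(mean_x, mean_y)

# Individual-level analysis, stratified by region (the confounder).
within_r = {name: pearson(xs, ys) for name, (xs, ys) in regions.items()}

# Individual-level data pooled while ignoring region.
all_x = [x for xs, _ in regions.values() for x in xs]
all_y = [y for _, ys in regions.values() for y in ys]
pooled_r = pearson(all_x, all_y)

print(f"correlation of regional averages: {group_r:+.2f}")
print(f"within-region correlations: {within_r}")
print(f"individual-level, ignoring region: {pooled_r:+.2f}")
```

The regional averages suggest a perfect positive relationship, and even individual data pooled without regard to region look strongly positive, yet within every region the relationship runs the other way. Only individual-level data let you stratify by the confounder and see that.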

Having identified what they think to be the problems with EBM, Hickey and Roberts then converge upon a solution that destroyed another of my irony meters:

Doctors must encompass enough knowledge and therapeutic variety to match the biological diversity within their population of patients. The process of classifying a particular person’s symptoms requires a different kind of statistics (Bayesian), as well as pattern recognition. These have the ability to deal with individual uniqueness.

As I’ve pointed out, Kimball Atwood has argued extensively for Bayesian statistics. Part and parcel of Bayesian statistics is estimating prior probability based on basic science. Little do Hickey and Roberts realize that a proper application of Bayesian statistics to the sorts of treatments encompassed by CAM would not help them. Not at all. There’s a reason for an even greater hostility towards the concept of SBM than towards EBM among CAM promoters. A Bayesian analysis of homeopathic remedies, for instance, would start from a prior probability of their working that approximates zero, based on homeopathy’s claimed principles of action. Ditto reiki, therapeutic touch, and basically any form of “energy healing.” And, I might add, although its prior probability is not at homeopathic levels, given that it involves giving actual chemical substances, Bayes would not be kind to orthomolecular medicine either, which is very good at sounding scientific but in practice boils down to giving megadoses of vitamins and other nutrients. As for “pattern recognition,” who knows what they mean by that? Actually, on second thought, I think I do know what they mean by that. As I like to say, to purveyors of woo, “pattern recognition” means seeing what they want to see based on anecdotal “clinical experience” or small clinical trials and acting on that.
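Why Bayes would not rescue homeopathy can be shown with the odds form of Bayes’ theorem: posterior odds equal prior odds multiplied by the Bayes factor (how strongly the new data favor “it works” over “it doesn’t”). The priors and the Bayes factor below are hypothetical round numbers chosen for illustration, not estimates from any published analysis.

```python
def posterior_probability(prior, bayes_factor):
    """Update a prior probability with a Bayes factor via posterior odds."""
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * bayes_factor
    return posterior_odds / (1 + posterior_odds)


# Hypothetical: one positive trial whose data favor "it works" tenfold.
BAYES_FACTOR = 10

# Hypothetical priors: a remedy whose proposed mechanism contradicts basic
# physics and chemistry versus a drug with a plausible mechanism.
implausible = posterior_probability(1e-6, BAYES_FACTOR)
plausible = posterior_probability(0.10, BAYES_FACTOR)

print(f"implausible remedy: {implausible:.6f}")
print(f"plausible drug:     {plausible:.3f}")
```

The same tenfold evidence moves the plausible drug to roughly a coin flip but leaves the implausible remedy at around one chance in 100,000, which is why prior plausibility, once taken seriously, works against CAM rather than for it.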

In fact, this passage right here leads me to think their attitude towards data is at the very least confused and at the very worst intentionally deceptive:

Population statistics do not capture the information needed to provide a well-fitting pair of shoes, let alone to treat a complex and particular patient. As the ancient philosopher Epicurus explained, you need to consider all the data.

Restricting our information to the “best evidence” would be a mistake, but it is equally wrong to go to the other extreme and throw all the information we have at a problem. Just as Goldilocks in the fairy-tale wanted her porridge “neither too hot, nor too cold, but just right” doctors must select just the right information to diagnose and treat an illness. The problem of too much information is described by the quaintly-named curse of dimensionality, discussed further below.

Later, they write:

In their models and explanations, scientists aim for simplicity. By contrast, EBM generates large numbers of risk factors and multivariate explanations, which makes choosing treatments difficult. For example, if doctors believe a disease is caused by salt, cholesterol, junk food, lack of exercise, genetic factors, and so on, the treatment plan will be complex. This multifactorial approach is also invalid, as it leads to the curse of dimensionality. Surprisingly, the more risk factors you use, the less chance you have of getting a solution. This finding comes directly from the field of pattern recognition, where overly complex solutions are consistently found to fail. Too many risk factors mean that noise and error in the model will overwhelm the genuine information, leading to false predictions or diagnoses.

Yes, you read it right. Hickey and Roberts criticize EBM for being too simplistic while at the same time criticizing it for being so complex that it makes treatment decisions difficult. Which is it? Who knows? I rather suspect that EBM is too simple or too complex for them depending on what they need it to be in order to justify their disdain for EBM and their support for the pseudoscience of “orthomolecular medicine.” In any case, just as we don’t need to invoke pseudoscience and quackery to provide patients with the “human touch” while providing care, we don’t need “pattern recognition” of the kind that Hickey and Roberts apparently mean (i.e., whatever seems to support their use of various forms of CAM) in order to tailor our treatments to patients more finely based on EBM. In fact, with the new era of genomic medicine, what we now face is a flood of information whose application to patients is proving to be exceedingly difficult. How would Hickey and Roberts deal with this problem? They don’t say, probably because providing real solutions is not their intent. Attacking EBM is, because EBM stands in the way of their practicing pseudomedicine.

Hickey and Roberts conclude:

Personalized, ecological, and nutritional (orthomolecular) medicines are converging on a truly scientific approach. We are entering a new understanding of medical science, according to which the holistic approach is directly supported by systems science. Orthomolecular medicine, far from being marginalized as “alternative,” may soon become recognized as the ultimate rational medical methodology. That is more than can be said for EBM.

I’ve lost track of how many times I’ve seen this claim, which suggests to me that early 2012 might well be the time to address it in depth. After all, practitioners as diverse as Hickey and Roberts and even Stanislaw Burzynski himself make it, and, although I started to address such claims when discussing Burzynski, I need to do a more general post about this. The specific claim that needs discussion, perhaps in a “part III” of this series, is that CAM is somehow “personalized” and akin to new findings in systems biology. The co-opting of systems biology by woo has been a sore point with me for a while, and Burzynski’s “personalized gene-targeted cancer therapy” brought it to the fore again just last month.

In the meantime, I’ll conclude by pointing out that attacks on EBM/SBM by CAM apologists serve multiple purposes. Because CAM practitioners can’t provide strong evidence of efficacy, they have to attack the system of science that shows their woo doesn’t work. In addition, claims of extreme “individualization” as compared to EBM provide a convenient excuse when CAM fails scientific testing. After all, have you ever seen a CAM proponent complain that a positive trial of CAM was not adequately “individualized” and therefore is invalid? Of course not! “Individualization” is only invoked when convenient, to explain failure. Finally, claims of extreme “individualization” serve marketing purposes, catering to people’s desire to feel special and unique, stroking their egos, and portraying following EBM guidelines as akin to being a mindless follower as opposed to “thinking for themselves.” The problem is that this individualization isn’t individualization based on science and a better understanding of individual biology. It’s an “individualization” that means “making it up as you go along.” EBM has its deficiencies, and we’ve discussed them frequently on this blog, but in the end I’d take it any day over the faux “individualization” promoted by Hickey and Roberts and their fellow travelers.