Healthcare professionals face many mental pitfalls and logical stumbling blocks when attempting to untangle the complex web of patient history and physical exam findings. These can impede our ability to practice high-quality medicine at every step in the process, interfering with our ability to establish an accurate diagnosis and to provide comfort or cure. And we are all susceptible, even the most intelligent and experienced among us. In fact, having more intelligence and experience may even enlarge our bias blind spots.

Steven Novella discussed the complexities of clinical decision making in early 2013, specifically tackling some of the more common ways that physicians can come to a faulty conclusion in the third installment of the series. One cognitive bias that has yet to be specifically addressed on the pages of Science-Based Medicine, and one that I encounter regularly in practice, is outcome bias. Simply put, outcome bias in medicine occurs when the assessment of the quality of a clinical decision, such as the ordering of a particular test or treatment, is affected by knowledge of the outcome of that decision. We are prone to assigning more positive significance to a decision when the outcome is positive, and we often react more harshly when the outcome is negative. This bias is particularly obvious when the result of a decision largely comes down to chance.

I see outcome bias rear its ugly head in two contexts for the most part: the Lucky Catch and the Bad Call.

The Lucky Catch

From Scrubs, season 8, episode 6, “My Cookie Pants”:

JD: I don’t know who you think you are. But I promise you, you are gonna regret this mistake for the rest of your –

Nurse: Sorry to interrupt. Great call ordering that endoscopy, Dr. Mahoney. Mr. Lawton has stomach cancer.

JD: Jo, I’m sorry. I thought you were being callous with Mr. Lawton, and you were just being thorough.

Most doctors have their own personal lucky catch or “great call” story, or at least can easily recount one that was passed down to them at some point in their career. A patient presents with an unusual symptom or syndrome, and in a seeming flash of inspiration a particular lab or imaging modality is requested that reveals the rare or unlikely diagnosis. What tends to follow is some degree of awe at the clinical acumen of the ordering physician, and in some cases more than a bit of hindsight bias. (“Well of course that’s what the guy had! The clues were right in front of them the whole time.”)

In reality, medical mystery-type lucky catches like this are the exception rather than the norm. The much more common version, but one less likely to achieve legend status in a physician’s personal narrative, tends to occur when we’ve dusted off the diagnostic shotgun. Shotguns disperse multiple pellets in a wide pattern in order to increase the likelihood of making contact with the target. An ordering physician does much the same when they thoughtlessly request a large number of tests.

Sometimes, depending on the severity of the patient’s illness, the diagnostic shotgun is a necessary tool in our armamentarium. A critically ill patient unable to provide a personal medical history, for example, often deserves a more substantial initial work-up. A rapidly approaching death may preclude a tiered approach to testing. More often than not, however, the shotgun approach is unhelpful and merely increases the chance of mistakenly assuming that a false positive finding is playing a role in the patient’s illness. It can also result in an incidental but real finding that exposes a patient to the risks of additional testing and treatment for something that may never have been a problem. And yes, sometimes a shotgun approach uncovers a surprising cause of the patient’s complaints.
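To put a rough number on the false positive risk of the shotgun approach, here is a back-of-the-envelope sketch. It rests on simplifying assumptions that are mine and are not drawn from any particular study: every test has the same specificity (95% is an illustrative figure), the tests are statistically independent, and the patient has none of the conditions being tested for. Real tests are often correlated and vary widely in specificity, so treat the output as an illustration of the trend only. The function name is hypothetical.

```python
def prob_any_false_positive(n_tests: int, specificity: float = 0.95) -> float:
    """Chance that at least one of n_tests comes back falsely positive.

    Assumes (for illustration only) independent tests with identical
    specificity, run on a patient who has none of the target conditions.
    """
    if n_tests < 0:
        raise ValueError("n_tests must be non-negative")
    if not 0.0 <= specificity <= 1.0:
        raise ValueError("specificity must be between 0 and 1")
    # P(all tests correctly negative) = specificity ** n_tests
    return 1.0 - specificity ** n_tests


# Print the risk for a few hypothetical panel sizes.
for n in (1, 5, 10, 20):
    print(f"{n:>2} tests: {prob_any_false_positive(n):.0%}")
```

Under these assumptions the chance of at least one false positive climbs from about 5% with a single test to roughly 23% with five, 40% with ten, and 64% with twenty.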

Outcome bias kicks in when we look back at the decisions that preceded the lucky catch or positive outcome and judge them more positively, even when the care in question was of poor quality. We often forget or even fail to acknowledge when a test or treatment had an unfavorable risk versus benefit ratio. This bias can reinforce the drive to perform more unnecessary testing in the future, not only by the directly involved parties but also by any impressionable learners hearing the tale in the years to come.

The Bad Call

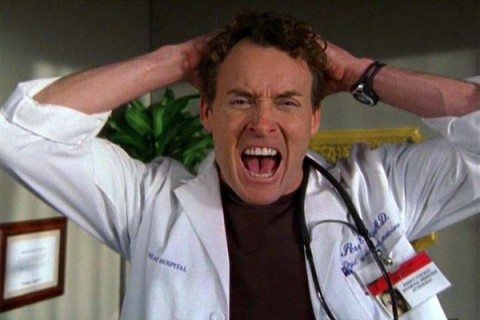

From Scrubs, season 5, episode 20, “My Lunch” (from 1:00):

JD: There’s no way you could have seen that coming. I mean, rabies? Come on, there’s, like, three reported cases a year. In fact testing for it would have been irresponsible. You would have wasted time those people didn’t have.

Dr. Cox: I was obsessed with getting those organs.

JD: You had to be. The fact is, those people were going to die in a number of hours and you had to make a call. I would have made the same call.

….

Dr. Cox: Aw, come on. Come on, Come on! GOD! GOD, GOD!

(Dr. Cox throws the paddles at the defibrillator and smashes the machine against the wall. Carla and the other nurses clean up and exit. Mr. Bradford’s body vanishes and Dr. Cox is left standing alone. J.D. enters.)

Dr. Cox: He wasn’t about to die, was he, Newbie? Could have waited another month for a kidney.

(Dr. Cox exits the room and removes his gloves.)

JD: Where are you going? Your shift’s not over. Hey! Remember what you told me? The second you start blaming yourself for people’s deaths…there’s no coming back.

Dr. Cox: (faces JD) Yeah…you’re right.

Okay, that was a bit melodramatic. It is a TV show, after all. But outcome bias can also lead physicians to judge their own decisions, or the decisions of others, more harshly when a bad outcome occurs. It can even make us less likely to recommend appropriate science-based tests or treatments in the future. When a pharmaceutical agent or surgery is indicated, even strongly recommended, it will never be without some degree of risk. But it can be very difficult to separate the decision from the outcome in a rational way when a patient has suffered.

It’s easy to be hard on ourselves, but even easier to be hard on other physicians with whom we don’t have a personal relationship. In a medical context, jousting refers to one physician speaking poorly of another, or in some way implying that a decision made by another caregiver was inappropriate. It is very hard to avoid jousting because it often happens without us even realizing we are doing it. One doesn’t have to say that Dr. A is an idiot in a “Dr. House”-style rant to effectively undermine a fellow doctor and increase the risk of both parties being involved in malpractice litigation. Offhand comments can be just as effective. (“Oh, I’ve never heard of that approach to this condition.” or “I can’t believe something wasn’t done sooner.”)

Another cognitive hiccup that can add to the impact of outcome bias when evaluating the decisions of other healthcare professionals is the fundamental attribution error. When a negative outcome is known, we tend to immediately blame some internal factor in the involved physician – incompetence or intellectual laziness, for example – and we downplay or completely ignore situational or external factors that were out of their control and may have played a role. We are quick to blame a surgeon for a complication, for example, even when there may be a known rate for that complication that is not affected by procedural skill.

Conclusion

Some days it seems that the deck is stacked against us. Outcome bias and the fundamental attribution error are just two of many biases that can alter our perception of reality. Outcome bias can affect how we judge not only our own actions but also the actions of others. And as if that weren’t enough, it has the potential to change how we practice medicine in the future because of our flawed assessments of prior decisions. As always, the key to combating errors in thinking like these is knowing that they exist in the first place. With that knowledge in place, when we are called upon to review the management performed by a peer, or reflect on our own decisions, these biases should be easier to reduce or maybe even avoid altogether.