When we refer to “science-based medicine” (SBM), it is a very conscious choice to emphasize that good medicine should be based on a solid foundation of science. The name was coined to highlight the difference between the current evidence-based medicine (EBM) paradigm, which fetishizes randomized clinical trial evidence above all else and frequently ignores prior plausibility based on well-established basic science, and the SBM paradigm, which takes prior plausibility into account. The purpose of this post is not to resurrect old discussions of these differences, but before I attend to the study at hand, I bring this up to emphasize that progress in science-based medicine requires progress in science. That means progress at all levels of biological (and even non-biological) basic science, which forms the foundation upon which translational science and clinical trials are built. Without a robust pipeline of basic science discoveries on which to base translational research and clinical trials, progress in SBM will slow or even grind to a halt.

That’s why, in the U.S., the National Institutes of Health (NIH) is so critical. The NIH funds an enormous amount of biomedical research each year, which means that what it will and will not fund can’t help but profoundly shape the pipeline of basic and preclinical research that ultimately leads to new treatments and cures. Moreover, NIH funding has a profound effect on the careers of biomedical researchers and clinician-scientists, because the “gold standard” NIH grant, known as the R01, is viewed as a prerequisite for tenure and promotion at many universities and academic medical centers. This is especially true for basic scientists; for clinician-scientists, an R01 is highly prestigious, but failing to secure one is less of a career-killer. That’s why NIH funding levels, and how hard (or easy) it is to secure an NIH grant, particularly an R01, are perennial obsessions among those of us in biomedical research. It can’t be otherwise, given the centrality of the NIH to research in the U.S.

It’s also why the current hostile NIH funding environment, with pay lines routinely around the 7th percentile, has brought this issue to the fore once again, and whenever NIH funding levels become a topic of discussion, the peer review of NIH grants inevitably becomes one too. NIH grants are reviewed by a study section, a panel of scientists with (hopefully) the relevant expertise, each of whom reads and reviews a subset of the submitted grants. The panel then meets, usually in Bethesda but increasingly by video conference, to discuss and score the proposals. Having participated in a number of NIH study section meetings as an ad hoc reviewer, I have some appreciation for the process, which sometimes involves a lot of contentious discussion and at other times is amazingly cordial.

Regular readers know that many of us here at SBM are great admirers of John Ioannidis, who is best known for an analysis he published several years ago entitled Why most published research findings are false. Personally, I’ve commented on a couple of Ioannidis’ other publications, including an analysis of the life cycle of translational research (hint: it takes a loooong time for an idea to make it through basic science studies and clinical trials to become an accepted therapy). This time around, Ioannidis and co-author Joshua M. Nicholson have published a commentary in Nature entitled Research Grants: Conform and Be Funded, which addresses the very issue of which sorts of grants the NIH funds. As is often the case, Ioannidis is provocative in making his point. As is less often the case, I’m not entirely sure he’s on base here.

An NIH study section evaluating grants. I’ve sat in a hotel conference room very much like this before when I served as an ad hoc reviewer for a study section.

The issue of whether the NIH supports “safe” and “unimaginative” science is something scientists have been debating since long before I ever got into the business. The question comes up particularly during harsh funding times (like now). Back when I was in graduate school 20 years ago, the NIH was in another “bust” phase of its boom-or-bust cycle, and pay lines were as tight as they are now. Well do I remember seeing two different tenured professors forced to shut their labs down because they could no longer secure funding after having done so successfully for many years. In any case, it makes a lot of sense that during tight funding times the NIH would become more conservative in what it funds. After all, when money’s tight you don’t want to risk wasting it. During such times, study sections have been observed to demand more and more preliminary data and to become more and more critical of ideas that don’t look on the surface like a “slam dunk.” A measure of arbitrariness also creeps into funding decisions. After all, the process isn’t so objective that there is a clear difference in quality between a grant scoring in the 5th percentile (fundable) and one scoring in the 10th percentile (probably not fundable).

As he frequently does, Ioannidis tries to quantify the degree of conservativeness and conformity in the NIH review process, introducing the concept this way:

The NIH has unquestionably propelled numerous medical advances and scientific breakthroughs, and its funding makes much of today’s scientific research possible[1].

However, concern is growing in the scientific community that funding systems based on peer review, such as those currently used by the NIH, encourage conformity if not mediocrity, and that such systems may ignore truly innovative thinkers[2, 3, 4]. One tantalizing question is whether biomedical researchers who do the most influential scientific work get funded by the NIH.

The influence of scientific work is difficult to measure, and one might have to wait a long time to understand it[5]. One proxy measurement is the number of citations that scientific publications receive[6]. Using citation metrics to appraise scientists and their work has many pitfalls[7], and ranking people on the basis of modest differences in metrics is precarious. However, one uncontestable fact is that highly cited papers (and thus their authors) have had a major influence, for whatever reason, on the evolution of scientific debate and on the practice of science.

So basically, you can see where this is going. Ioannidis is going to try to determine whether the most “influential” scientists are NIH-funded. One can quibble, of course, about whether the most cited papers are truly the most “influential.” After all, highly cited papers are sometimes influential in a bad way or are cited as examples of a paradigm that was later rejected. However, there aren’t any really good ways of measuring a scientist’s influence; citations are probably about as useful a measure as one can come up with. At least they are an objective number that can be counted. After his analysis, Ioannidis also makes some interesting, although perhaps unworkable, suggestions for improving the process.

First, let’s look at the key finding of the entire exercise. Nicholson and Ioannidis first identified 700 papers in biomedical research journals published since 2001 that had received 1,000 or more citations. They then examined the records of the key authors of those papers. It’s fairly amazing what a select group this is. More than 20 million papers were published worldwide between 2001 and 2012, of which only 1,380 had received 1,000 or more citations. Of these, 700 were catalogued in the life or health sciences and had an author affiliation in the U.S. These 700 papers yielded 1,172 distinct single, first, or last authors. Ioannidis concentrated on these authors because, in biomedical research publications, the first author is usually the one who did most of the work and wrote the paper, while the last author is usually the principal investigator (PI), i.e., the researcher in whose laboratory the research was carried out. The first author is therefore frequently a graduate student or postdoctoral fellow working in the PI’s laboratory.
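
To put “select group” in numbers, the shares implied by the counts above can be computed directly. This is a minimal sketch; it treats “more than 20 million” as exactly 20 million, so the resulting share is an upper bound, and the variable names are my own.

```python
# Counts as given in the text. "More than 20 million" is treated as exactly
# 20 million (an assumption), so elite_share is an upper bound.
TOTAL_PAPERS = 20_000_000
HIGHLY_CITED = 1_380    # papers with >= 1,000 citations, 2001-2012
US_LIFE_HEALTH = 700    # subset in life/health sciences with a US affiliation


def share_percent(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


elite_share = share_percent(HIGHLY_CITED, TOTAL_PAPERS)
us_share = share_percent(US_LIFE_HEALTH, HIGHLY_CITED)

print(f"Highly cited share of all papers: at most {elite_share:.4f}%")
print(f"US life/health share of highly cited papers: {us_share:.1f}%")
```

In other words, fewer than 7 in every 100,000 papers published in that period reached 1,000 citations, and about half of those made it into the analysis.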

So what were the results? Because I’m going on vacation and feeling a little lazy right now, I’ll let Ioannidis describe them:

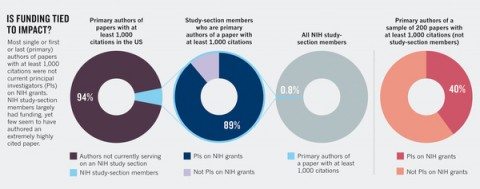

We discovered that serving on a study section is not necessarily tied to impact in the scientific literature. (see ‘Is funding tied to impact?’). When we cross-checked the NIH study-section rosters against the list of 1,172 authors of highly cited papers, we found only 72 US-based authors who between them had published 84 eligible articles with 1,000 or more citations each and who were current members of an NIH study section. These 72 authors comprised 0.8% of the 8,517 study-section members. Most of the 72 (n = 64, 88.9%) currently received NIH funding.

We then randomly selected 200 eligible life- and health-science papers with 1,000 or more citations (analysing all 700 would have required intensive effort and yielded no extra information in terms of statistical efficiency). We excluded those in which the single, first or last author was a member of an NIH study section, and those in which the single or both the first and last author were not located in the United States on the basis of their affiliations at the time of publication. This generated a group of 158 articles with 262 eligible US authors who did not participate in NIH study sections. Only the minority (n = 104, 39.7%) of these 262 authors received current NIH funding.
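
The percentages in the quoted passages follow directly from the raw counts, and they are easy to check. A minimal sketch in Python; the counts come from the quotes above, while the labels and the helper function are hypothetical names of my own.

```python
# (numerator, denominator) pairs copied from the quoted passages.
counts = {
    "study-section members who are highly cited authors": (72, 8_517),
    "highly cited members with current NIH funding": (64, 72),
    "highly cited non-members with current NIH funding": (104, 262),
}


def pct(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place, as the commentary reports it."""
    return round(100 * numerator / denominator, 1)


results = {label: pct(n, d) for label, (n, d) in counts.items()}
for label, value in results.items():
    print(f"{label}: {value}%")
```

These are simple descriptive proportions; the sketch makes no attempt to test whether the differences between groups are statistically significant.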

These data are presented in graphical form in the article.

So the basic finding is that researchers viewed as “highly influential” are often not on NIH study sections. Ioannidis notes that their rates of NIH funding are no higher than, and might be lower than, those of biomedical scientists in general. He cites data suggesting that between 24% and 37% of biomedical researchers applying for grants from 2001 to 2011 were funded as principal investigators. True, Ioannidis points out that the funding rates for individual grants are considerably lower (after all, pay lines over the last few years have been around the 7th percentile), but because researchers submit multiple grants, a significant fraction of them ultimately receive NIH funding.

There is one problem with using this particular range, however. The early part of the range is very different from the more recent part as far as pay lines go. From 1998 to 2003, the NIH budget nearly doubled, the result of an initiative started under the Clinton Administration and completed under the Bush Administration. Truly, back then it was the land of milk and honey for researchers competing for NIH grants, with pay lines well over the 20th percentile in some institutes. Then, after the doubling ended in 2003, the NIH came in for what has since become known as a “hard landing.” Budget increases did not keep up with inflation. Also, because grants awarded during the doubling could run as long as 5 years, their commitments persisted for several years after the large budget increases ended, leaving less money for new grants. Thus, this period encompasses both a “time of plenty” that lasted until around 2003 or 2004 and the current drought, which became really severe after around 2006. Despite all sorts of moves by the NIH to increase the number of new grants, such as cutting the budgets of existing and newly awarded grants, the drought shows no signs of abating any time soon.
All of this is why I would be curious whether Ioannidis’ estimate holds for the period from, say, 2006 to 2011 as well as it does for the earlier period from 2001 to 2005.
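
Why per-researcher funding rates can run well above per-grant success rates is easiest to see with a toy calculation. The sketch below assumes, purely for illustration, that every application succeeds independently with the same probability; the rates and application counts in it are hypothetical, not NIH figures, and real applications from the same investigator are anything but independent.

```python
def chance_of_at_least_one_award(per_application_rate: float, applications: int) -> float:
    """Probability that at least one of several applications is funded.

    Hypothetical model: every application succeeds independently with the
    same probability, which real NIH submissions do not satisfy.
    """
    if not 0.0 <= per_application_rate <= 1.0:
        raise ValueError("per_application_rate must be between 0 and 1")
    if applications < 0:
        raise ValueError("applications must be non-negative")
    # P(at least one success) = 1 - P(every application fails)
    return 1.0 - (1.0 - per_application_rate) ** applications


# Hypothetical inputs, chosen only for illustration.
for rate in (0.10, 0.15):
    for n in (1, 3, 5):
        p = chance_of_at_least_one_award(rate, n)
        print(f"per-application rate {rate:.0%}, {n} applications -> {p:.1%}")
```

Under these assumptions, a 10% per-application success rate over three applications gives roughly a 27% chance of at least one award. If outcomes for the same investigator are positively correlated, as seems likely, the true chance would tend to be lower than this sketch suggests.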

Whether it does or not, the finding remains that there doesn’t seem to be a discernible difference between the NIH funding rates of these highly cited scientists and those of the rest of us hoi polloi, which does rather suggest that the current NIH system isn’t identifying the truly best and brightest. At least, this is what Ioannidis argues. Before getting to that argument, however, he makes another interesting observation, namely that study section members and non-members showed no significant difference in their total number of highly cited papers:

Among authors of extremely highly cited papers, study-section members and non-members showed no significant difference in their total number of highly cited papers, despite the fact that members of study sections were significantly more likely than non-members to have current NIH funding. This was true both for authors with multiple highly cited papers (13/13 versus 13/19, p = 0.024) and for those with a single eligible highly cited paper (51/59 versus 91/243, p < 0.0001) and overall in a stratified analysis (p < 0.0001).
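
The stratified counts in this passage also add up to the figures quoted earlier. The sketch below recomputes the funded shares within each stratum and then pools them; it reproduces proportions only, not the p-values, since the passage doesn’t say which statistical test produced them. The dictionary layout and names are my own.

```python
# Funded/total counts quoted from the commentary, stratified by how many
# highly cited papers each author had.
strata = {
    "multiple highly cited papers": {"members": (13, 13), "non-members": (13, 19)},
    "single highly cited paper": {"members": (51, 59), "non-members": (91, 243)},
}


def funded_percent(funded: int, total: int) -> float:
    """Share of authors with current NIH funding, as a percentage."""
    return 100 * funded / total


# Running [funded, total] sums per group across both strata.
pooled = {"members": [0, 0], "non-members": [0, 0]}
for stratum, groups in strata.items():
    for group, (funded, total) in groups.items():
        pooled[group][0] += funded
        pooled[group][1] += total
        print(f"{stratum}, {group}: {funded_percent(funded, total):.1f}% funded")

for group, (funded, total) in pooled.items():
    print(f"pooled {group}: {funded}/{total} = {funded_percent(funded, total):.1f}%")
```

Pooling the two strata gives 64/72 (88.9%) for study section members and 104/262 (39.7%) for non-members, matching the numbers quoted above.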

Some clarification is in order here. There is a reason why study section members are significantly more likely to have NIH funding: the NIH invites holders of NIH grants, particularly R01s, to join study sections, and many do. Indeed, one of the requirements for study section members is that they be a principal investigator “on a research project comparable to those being reviewed.” In other words, to be an official standing member of a study section, you generally need an NIH grant, usually an R01 or larger. The rest of the study section is then rounded out with a rotating band of ad hoc reviewers picked for specific areas of expertise. That’s why Ioannidis mentions this. His point is that being on a study section, or holding an NIH grant, shows no correlation with being one of these highly cited scientists.

Using the similarity, or “match,” score on the NIH RePORTER website, where you can search listings of federally funded research projects, Ioannidis also observed that the grants of study section members were more similar to other currently funded grants than were the grants of non-members. In other words, the funded grants of NIH study section members resemble each other and the grants the NIH funds in general. I can certainly guess why this might be true. One of the pieces of advice I received when I was starting out (advice given to lots of young investigators) was to find a way to get on a study section. The rationale is that by learning how the NIH evaluates grants, you can learn how to craft grants that are more likely to be funded. It therefore makes sense that a certain level of conformity creeps in, and there’s little doubt that this conformity becomes more pronounced when funding is tight. Study sections and the NIH do not want to “waste” taxpayers’ money on risky projects.

Or, as Ioannidis puts it:

If NIH study-section members are well-funded but not substantially cited, this could suggest a double problem: not only do the most highly cited authors not get funded, but worse, those who influence the funding process are not among those who drive the scientific literature. We thus examined a random sample of 100 NIH study-section members. Not surprisingly, 83% were currently funded by the NIH. The citation impact of the 100 NIH study-section members was usually good or very good, but not exceptional: the most highly cited paper they had ever published as single, first or last author had received a median of 136 (90–229) citations and most were already mid- or late-career researchers (80% were associate or full professors). Only 1 of the 100 had ever published a paper with 1,000 or more citations as single, first or last author (see Appendix 1 of Supplementary Information for additional citation metrics).

And, from a news report about Ioannidis’ study:

Top scientists are familiar with NIH’s penchant for the safe and incremental. Years ago, biologist Mario Capecchi of the University of Utah applied for NIH funding for a genetics study with three parts. The study section liked two of them but said the third would not work.

Capecchi got the grant and put all the money into the part the reviewers discouraged. “If nothing happened, I’d be sweeping floors now,” he said. Instead, he discovered how to disable specific genes in animals and shared the 2007 Nobel Prize for medicine for it.

Although I think Ioannidis definitely has a point, anecdotes like this are rife in the research world. Usually they take the form of scientists with ideas that they couldn’t persuade the NIH to fund, despite multiple grant applications, but that later turned out to be revolutionary or to lead to highly useful new therapies. The most common such anecdote that I hear is that of Dennis Slamon, who proposed targeting the HER2 oncogene in breast cancer. I’ve touched on his saga before, along with the whole issue of supporting risky science versus safer, more incremental science. It’s the issue of “going for the bunt versus swinging for the fences.” Basically, Slamon likes to go on and on about how he had trouble getting NIH funding to develop a humanized monoclonal antibody against HER2, but, as I pointed out, the story was actually a lot more complex than that. For instance, Slamon’s applications were submitted around a time when other scientists were having difficulty replicating his results, and study section members very likely knew that. Also, Slamon had no trouble getting NIH funding for other projects at the time.

To me, these anecdotes are the scientific variant of the mad scientist in horror movies ranting, “They thought me mad, mad, I tell you! But I’ll show them.” OK, I’m being a bit sarcastic, but these stories are so ubiquitous whenever anyone complains about the “conformity” and conservativeness of the NIH review process that I tend to want to gag every time I read one. They’re pure confirmation bias. Yes, occasionally the daring or bizarre idea pays off. However, far more often risky ideas do not pan out because, well, they are risky. Most risky ideas fail. It’s very easy to recognize innovative ideas with a high potential for impact in retrospect. With the benefit of hindsight, we now know that Slamon and Capecchi made huge contributions to science. At the time they were doing their seminal work, it wasn’t nearly as obvious. Both Slamon and Capecchi got lucky. Such is the nature of risky ideas that things could just as easily have gone the other way, and their big ideas could have gone nowhere.

But back to Ioannidis. I see a lot of problems with his analysis, not the least of which is his metric. For one thing, in some specialized fields even papers with very high impact would have difficulty reaching 1,000 citations, because there just aren’t enough scientists working in those fields to produce such blockbusters. Another issue is consistency. Truly influential and creative scientists tend to produce multiple influential papers, whereas even an average scientist can occasionally stumble onto something big. Even Ioannidis concedes that “one cannot assume that investigators who have authored highly cited papers will continue to do equally influential work in the future.” Indeed, how many of the papers analyzed by Ioannidis were one-shot successes whose authors never published anything nearly as influential again? To be fair, Ioannidis does make a good point that such investigators have cleared a bar that should entitle them to a chance to prove that they can keep doing such good work.

Finally, as NIH Director Francis Collins pointed out, scientists funded by the NIH have won 135 Nobel Prizes. The situation is not as clearly a problem as Ioannidis makes it sound. Besides, it is the very nature of science that “game changing” studies tend to be relatively few and far between. Most of the hard work of advancing science does come from incremental work, in which scientists build upon what has come before. We fetishize the “brave mavericks” and “geniuses,” and, yes, they are important, but identifying these geniuses at the time they are doing their work is not a trivial thing. Often the importance of their ideas and work is only appreciated in retrospect.

In the end, as much as I admire Ioannidis, I think he’s off base here. It’s not that I disagree that the NIH should try to find ways to fund more innovative research. However, Ioannidis’ approach to quantifying the problem seems to suffer from flaws in its very conception. In light of that, I can’t resist revisiting a question from my last post on riskiness versus safety in research, and it’s a simple one: What’s the evidence that funding more risky research will result in better research and more treatments? We have lots of anecdotes about scientists who couldn’t get NIH funding for ideas that were later validated and potentially game-changing, but how often does this really happen? As I’ve pointed out before, the vast majority of “wild” ideas are considered “wild” precisely because they are new and there is little good evidence to support them. Once evidence accumulates to support them, they are no longer considered quite so “wild.” We know today that the scientists whose anecdotes of woe describe the depredations of the NIH were indeed onto something. How many more scientists proposed ideas that seemed innovative at the time but ultimately went nowhere?

Ioannidis does raise a disturbing point, namely that scientists who have authored highly influential papers are apparently no more likely to obtain NIH funding than the rest of us and, more tellingly, that scientists on NIH study sections differ from those not on study sections only in their ability to persuade the NIH to fund them. NIH study sections are a lot of work, and they also have a culture, with a definite “in” crowd. It is quite possible that truly innovative thinkers don’t want to be bothered with the many hours of work that each study section meeting involves, which can require members to review five to ten large grants and then travel to Bethesda, and it is equally possible that such scientists “don’t play well with others” in a study section. It is certainly worthwhile to investigate whether this is the case and then to try to find a way to bring such creative minds into the grant review process. However, the assumption underlying Ioannidis’ analysis seems to be that there must be “bolts out of the blue” discovered by brilliant, brave maverick scientists. It’s all very Randian at its heart. But science is a collaborative enterprise, in which each scientist builds incrementally on the work of his or her predecessors. Bolts out of the blue are a good thing, but we can’t count on them, nor has anyone demonstrated that they are more likely to occur if the NIH funds “riskier research.” It’s equally likely that the end result would be a lot more dud research.

No one can say, and that’s the point.