You can lead a true believer to facts, but can you make him think?

I got an e-mail with a link to a video featuring “Dr.” Leonard Coldwell, a naturopath who has been characterized on RationalWiki as a scammer and all-round mountebank. Here are just a few examples of his claims in that video:

- Every cancer can be cured in 2-16 weeks.

- The second you are alkaline, the cancer already stops. A pH of 7.36 is ideal; 7.5 is best during the healing phase. [We are all alkaline. Normal pH is 7.35-7.45.]

- IV vitamin C makes tumors disappear in a couple of days.

- Very often table salt is 1/3 glass, 1/3 sand, and 1/3 salt. The glass and sand scratch the lining of the arteries, they bleed, and cholesterol is deposited there to stop the bleeding.

- Patients in burn units get 20-25 hard-boiled eggs a day because only cholesterol can rebuild healthy cells; 87% of a cell is built on cholesterol.

- Medical doctors have the shortest lifespan: 56. [Actually they live longer than average.]

My correspondent recognized that this video was dangerous charlatanism that could lead to harm for vulnerable patients. He called it a “train wreck, with fantasy piled upon idiocy.” His question was about the best way to convince someone that it was insane. He said, “If you could rely on someone to follow and understand basic information about the relevant claims, it would be a gimme. But to the casual disinterested observer, who can interpret the whole video as ‘Well, he just wants people to eat right,’ pointing out the individual bits of lunacy just looks like so much negativity.”

He asked, “How do I best represent what’s happening to someone who is either a) emotionally invested in this and/or b) casually approving of it? … I just want to be patient, not shout anyone down, not make anyone defensive, and then win. Very surprised I don’t already know how. But I feel like I don’t. What is the psychologically sophisticated approach to this?”

Here’s how I answered him

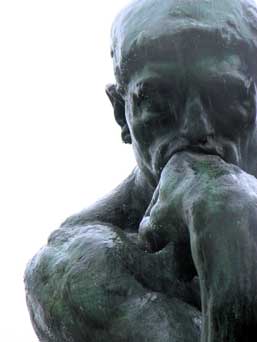

You can’t change someone else’s mind; they have to change their own mind. The sophisticated approach is to ask them questions that lead them to doubt, and gently lead them to discover the truth for themselves. That is something Socrates was very good at; I’m not. But I can suggest some questions to ask. Maybe start with some kind of validation: “Wow, that really sounds good; I can see why you’re impressed, but there are some things I’m wondering about…”

If alkalinization works so well to eliminate cancer, why do you suppose he bothers to recommend a lot of other ways to cure cancer, with vitamin C, oxygen, a vegan diet, etc.? Why do you suppose oncologists don’t offer any of those treatments to their patients? How likely is it that this one man is right and the entire scientific medical community is wrong about everything from salt to vitamins to cholesterol? How do you think he arrived at those conclusions? Have you ever seen a video that you knew was wrong about anything? How could you go about finding out if anything in this one was inaccurate? Have you read what other doctors have had to say about the alkaline diet? Is there a way you could verify his claims through other sources? Would it be worthwhile to look for the scientific evidence he cites? If you think “he just wants people to eat right,” don’t you think all those people who disagree with him also want people to eat right? If you get cancer and try the alkaline diet and it hasn’t eliminated the cancer as promised in two days, what will you think? What will you do?

Do you believe that “very often, table salt is one-third glass, one-third sand, and one-third salt”? Did you know that the FDA requires that all US table salt be at least 97.5% pure sodium chloride, and it is usually much purer? We can find out for ourselves. Let’s do a little experiment. Glass and sand don’t dissolve in water. Put some table salt in a glass of water. The salt will dissolve, and any insoluble impurities will sink to the bottom and form a visible sediment. Do you see a residue of sand and glass particles at the bottom?

How could sand and glass in the diet get into the arteries and scratch them? Aren’t insoluble materials indigestible? Don’t they pass through the digestive system and end up in the toilet?

My “SkepDoc’s Rule” is: before you accept a claim, try to find out who disagrees with it and why. There are almost always two sides, and it quickly becomes obvious which side is supported by the best evidence and reasoning. If your interlocutor refuses even to look at any contradictory evidence, there is no point in continuing the discussion.

Don’t expect to “win.” True believers are impervious to evidence and reason. The best you can hope to do is plant a seed. Sometimes it takes many seeds of doubt, planted and watered by many different sources over the years, to finally have an effect.

Further thoughts

Since I wrote that, I’ve learned more about how to talk to true believers, and I’ve been persuaded that the task is not as hopeless as I thought. I heard philosopher and educator Peter Boghossian speak at a conference and was intrigued enough to seek out and read his book, A Manual for Creating Atheists. In it he describes a method for engaging the faithful in conversations that help them question how certain they can be about beliefs based on faith alone, and that encourage them to value reason and evidence over faith, superstition, and irrationality. Instead of discussing specific beliefs, he tries to get people to analyze how they arrive at beliefs in general. It occurred to me that most of what he says applies to true believers in CAM as well as to true believers in religion. Not everyone wants to create atheists, but I hope we all want to create critical thinkers.

Questioning epistemology, not specific beliefs

Rather than questioning specific beliefs, Boghossian questions epistemology: the study of knowledge, how we come to know what we know, how we decide whether to accept a belief as true. His approach is based on the Socratic method of asking questions that get his interlocutors to think more clearly. You can see Boghossian’s methods in action in a series of YouTube videos by Anthony Magnabosco. He does “street epistemology”: he asks random people how they arrived at their belief in God and, if they relied on a possibly unreliable method to get there, tries to help them discover that for themselves.

How do we know what we know? In the Tuyuca language, spoken by a tribe in the Amazon rain forest, to say that someone is chopping trees requires that one also specify how one knows this.[1] If you hear that someone is chopping trees, then you say Kiti-gï tii-gí, where gí indicates that this is something you hear. But if you actually see him chopping trees, then you say Kiti-gï tii-í, where the í indicates your having seen rather than heard it. If you have not actually perceived him chopping trees but have reason to suppose that he is doing so, then the sentence is Kiti-gï tii-hXi.[2] If your source of information about the tree chopping is hearsay, then this requires a special marker: Kiti-gï tii-yigï. It would be helpful if English specified the source of knowledge; our grammar doesn’t do it, but we can specify it using other words.

Boghossian gives five reasons why people embrace absurd propositions:

- They have a history of not formulating their beliefs on the basis of evidence.

- They formulate their beliefs on what they thought was reliable evidence but wasn’t.

- They have never been exposed to competing epistemologies and beliefs.

- They yield to social pressures.

- They devalue truth or are relativists.

All of these are applicable to CAM.

True believers do change their minds. Plenty of people have converted from one religion to another or rejected religious beliefs entirely; plenty of believers in CAM have come to reject it in favor of science. Swift said, “You do not reason a man out of something he was not reasoned into.” But people have been reasoned out of religious beliefs, including many ex-preachers. How can we best assist that process?

The Socratic method

The Socratic method has five stages:

- Wonder (I wonder if there is intelligent life on other planets)

- Hypothesis (There must be, since the Universe is so large)

- Elenchus (Q&A) – facilitator generates ideas about what might make the hypothesis untrue. (What if we’re the first? Or the last?)

- Accept or revise hypothesis (There probably is, but maybe not)

- Act accordingly (Stop saying you are certain there must be.)

For a CAM belief:

- I wonder if tea tree oil will cure my toenail fungus

- I know it will work, because it worked for my cousin

- But what if it would have cleared up on its own without treatment? What if your cousin didn’t actually have a fungus? What if a controlled study had shown tea tree oil wasn’t effective?

- I can’t know for sure whether it works, but I’m going to try it.

- If your toenail clears up, don’t tell people you have proved that it works.

Implementing the Socratic method for CAM

It helps to establish a friendly, trusting relationship with your interlocutor. Try to establish some things you have in common. Show an interest in his beliefs; try to understand exactly what it is that he believes and how he arrived at the beliefs. Say, “Tell me more about that.” Validate his experience. Don’t assume he is wrong. Assume he might be right, and show that you are willing to change your own beliefs if provided sufficient evidence. This should not be an adversarial relationship. Remember that you can learn from anyone; everyone you meet knows something you don’t know.

Don’t talk about facts. If the person didn’t form his belief because of facts, he won’t change his mind because of facts. The idea is not to change beliefs, but to change the way people form beliefs. Try to avoid arguing about the belief itself and concentrate on how the belief was arrived at and how one can best arrive at beliefs that are true. Do other people ever arrive at beliefs that are wrong? How does that happen? Ask your interlocutor to think of examples of false beliefs that other people hold, and talk about where they went wrong. Ask him to rate on a numerical scale how confident he is that his beliefs are true. Magnabosco uses a scale of 1-100 where 100 is absolute certainty.

If you can think of a claim that contradicts the believer’s claim, ask how you could go about determining which of the two claims is correct. If two believers believe equally strongly in two different religions, they can’t both be right; how could you go about determining which one is right? If one CAM system claims that all disease is due to subluxations and another claims that all disease is due to disturbances of qi in the meridians, point out that they can’t both be true.

If they claim to have evidence, you might ask, “For all evidence-based beliefs, it’s possible that there could be additional evidence that comes along that could make one change one’s beliefs. What evidence would you need to make you change your mind?”

If your interlocutor bases his belief on something he “felt,” ask how we could differentiate between that kind of feeling and a delusion. (It’s much less likely to be a delusion if the believer is willing to revise the belief.)

Boghossian uses this series of questions in the classroom:

- Is it possible that some people misconstrue reality?

- Do some people misconstrue reality?

- If one wants to know reality, is one process just as good as any other? (Flipping a coin? Interpreting a dream? Doing a scientific experiment?)

- So then are some processes worse and some better?

- Is there a way we can figure out which processes are good and which are not?

Most students quickly figure out that processes that rely on reason and evidence are more reliable than other processes.

Understand that some people are epistemological victims who have never encountered competing ways of understanding reality. Some religious believers have been deliberately isolated and protected from exposure to any information outside their religious tradition. For CAM, even some doctors and scientists fail to truly understand how the scientific method works and how important it is. CAM advocates seek out like-minded people. Internet “filter bubbles” deliver customized information so people are not likely to find contradictory or disconfirming information by googling.

Surely it is worthwhile to try to help people think more clearly. Think of it as an intervention, like deprogramming a cult member. These are people who need your help. Certainty is the enemy of truth. It removes curiosity and stifles investigation. Susan Jacoby said, “I think of the first atheist as someone who, while grieving over the death of a child in ancient times, refused to say, ‘It’s the will of the gods,’ and instead began searching for a natural rather than a supernatural explanation.” If everyone had believed homeopathy was the cure for all disease, antibiotics would never have been discovered. Reality is always a better guide to life than fantasy and error.

Boghossian cites another author who calls religion a kind of virus that infects people, spreads to others, programs the host to replicate the virus, creates antibodies or defenses against other viruses, takes over certain mental and physical functions and hides itself within the individual in such a way that it is not detectable by the individual. In that sense, belief in CAM is also a virus.

It’s cruel to take away people’s cherished beliefs without offering a replacement that offers hope for a better life. Boghossian has proposals for helping religious believers who have given up their belief but are devastated by the loss of their community and need a new source of support. For CAM, we can offer a truly effective way of determining whether a treatment works, the scientific method; and we can direct them to credible sources of information and empower them to tell for themselves whether something is science or pseudoscience. As Bill Nye says, “Science rules!” It doesn’t always provide certainty, but isn’t uncertainty preferable to false certainty? Science is fascinating and exciting and full of wonders, and it has the added advantage of being true.

If you fail

If you think your intervention has been a failure, you may be wrong. If believers get angry or seem to become even more entrenched in their beliefs, it may be that they are already experiencing doubts. They may have become more self-aware. Boghossian says, “They may have said things or taken positions to justify the beliefs to themselves.” You may have made a small dent in their certainty, and dents may set the stage for a later breakthrough.

The final comeback

True believers often end with these final arguments:

- “It’s true for me.”

- Even if it isn’t true, it helps people (by providing hope, motivation, etc.)

At this point, you can ask if people are really helped by forming false beliefs.

Another skeptic’s approach to a true believer

Benjamin Radford’s recent article in Skeptical Inquirer[3] also offers some very constructive advice. He describes his response to a woman who believes she has been cursed and asks him how to remove the curse. He sympathizes with her and assures her that he is concerned about her welfare. He validates her experience that “something” is going on. He plants a seed of doubt and offers an alternate, non-threatening explanation for what she experienced. He demonstrates open-mindedness. He gives her accurate information about psychic scams. He establishes his bona fides as an expert on the subject, focusing on the psychology of curse beliefs. He explains that all curses are fundamentally the same. He empowers her: he advises her to stop going to psychics, to remember that everyone has some of the same troubles she is attributing to the curse, and to focus not on the negative things but on positive things she can do to help herself.

A final word

I can’t claim that there are any controlled studies to show that this method works to persuade true believers to change their minds or that it is better than any other method. But other methods have few success stories, and in my opinion this sounds like it might be worth trying. It certainly isn’t easy. I recently tried to apply Boghossian’s methods in an e-mail exchange with a woman who holds all kinds of weird beliefs, and I failed miserably. In my experience of many similar discussions, the true believer always eventually makes new claims that are so outrageous that I lose self-control and simply can’t restrain myself from throwing facts at them to show them how wrong they are, and that ends any chance of a productive discussion. I’ll keep trying. I would appreciate hearing from others who have had successes or failures in discussions with true believers.

At any rate, the purpose of SBM (Science-Based Medicine) is not to convince true believers but to provide accurate information so people whose minds are not irrevocably made up can make informed health decisions. And we have had considerable success doing that.

Notes

1. The Power of Babel, by John McWhorter. New York: Henry Holt and Company, 2002. P. 180.

2. The X stands for a linguistic symbol I was unable to reproduce.

3. Radford, B. “A skeptic’s guide to ethical and effective curse removal.” Skeptical Inquirer 39:4, pp. 26-28. July/August 2015.