Alone of all the regular contributors to this blog, I am a surgeon. Specifically, I’m a surgical oncologist specializing in breast cancer surgery, which makes me one of those hyper-specialized docs who are sometimes mocked as not being “real” doctors. Of course, the road to my current practice and research focus was long and involved quite a few years doing general surgery, so it is not as though I am unfamiliar with a wide variety of surgical procedures. Heck, I’m sure I could do an old-fashioned appendectomy, bowel resection, or cholecystectomy if I had to. Just don’t ask me to use the da Vinci robot or, except for a cholecystectomy, a laparoscope. That said, given the popularity of robotic surgery, I sometimes joke that I really, really need to figure out how to do breast surgery with the robot. After all, if plastic surgeons are using it for breast reconstruction, surely the cancer surgeon should get in on the action.

Clinical trials of surgical procedures and placebo controls

I have, however, from time to time addressed the issue of science-based surgery, and this weekend seems like as good a time as any to do so again, given that I just came across an article in the BMJ reporting a systematic review of the use of placebos in surgical trials. It’s a year old, but worth discussing. Before I get to the nitty-gritty of this particular review, let me just note that the evaluation of surgical procedures for efficacy and safety tends to be more difficult to accomplish than it is for medications, mainly because it’s much harder to do the gold standard clinical trial for surgical procedures, the double-blind, placebo-controlled randomized clinical trial. The two most problematic aspects of designing such an RCT in surgery, as you might imagine, are the blinding, particularly if it’s a trial of a surgical procedure versus no surgical procedure, and persuading patients to agree. I’ll deal with the latter first, because I have direct personal experience with it.

Way, way back in the Stone Age of my career (around 15 years ago), there was a surgical trial going on known as the NSABP-B32 trial. This was a trial designed to test a procedure called sentinel lymph node (SLN) biopsy in breast cancer, which involves using dye (either radioactive or blue or both) to identify the lymph node(s) under the arm most likely to contain cancer. The idea was to determine if a patient had positive lymph nodes without doing the old standard of care, removing all the axillary lymph nodes, a procedure with a high risk of lymphedema (arm swelling due to interruption of lymphatic drainage) and other problems that made evaluating the lymph nodes more complication-prone than the surgical excision of the actual breast cancer. The problem was that we didn’t know how accurate SLN biopsy was or how often it misclassified a woman with node-positive disease as node-negative. So a trial was undertaken in which women were randomized either to (1) SLN biopsy followed by axillary lymph node dissection (removal of all the axillary lymph nodes to compare the results of the SLN biopsy) or (2) SLN biopsy alone, with an axillary lymph node dissection only if the SLN was positive for cancer. The results ultimately demonstrated that overall survival, disease-free survival, and regional control (control of the disease in the axilla) were the same in both groups, and SLN biopsy became the new standard of care in women with early stage, clinically node-negative breast cancer, replacing axillary lymph node dissection.

Here’s the issue. Persuading women to be randomized was difficult. Very difficult. Most wanted the newer and less-invasive procedure, the SLN biopsy, because they feared the complication of lymphedema that axillary lymph node dissection produced. A few were risk-averse and not willing to be randomized if they might get just the “new” procedure (at the time, the SLN biopsy). And this was with a trial that had no placebo (i.e., “sham” surgery) arm and was not blinded. Of course, the B-32 trial didn’t need to be blinded because we were examining “hard” outcomes: overall survival (the “hardest” endpoint of all), disease-free survival, and recurrence in the axilla. What about trials that are not examining endpoints like this? I’ve discussed one such example before, vertebroplasty for vertebral fractures resulting from osteoporosis. Vertebroplasty, a widely used procedure to reduce pain from these fractures, basically involves injecting glue into the fracture to stabilize it. Because it wasn’t a full surgical procedure and only involved injecting glue (or not injecting glue), the two vertebroplasty trials I discussed were relatively easy to blind compared to trials of more invasive surgical procedures, and the vertebroplasty trials ultimately showed that “real” vertebroplasty is no better than placebo vertebroplasty.

Then there are the ethical and practical issues as well. For instance, in the case of a surgical procedure involving more extensive rearrangement of anatomy (as I like to put it), a blinded trial would require that at least an incision be made on the patient identical to that of the surgery, but that nothing be done. This presents an ethical dilemma, since clinical trials rely on the principle of clinical equipoise (not knowing which treatment is better or whether the treatment being tested is better than no treatment) and beneficence, which means that risk must be minimized for clinical trial subjects. Indeed, clinical equipoise is often in conflict with scientific rigor in clinical trial design. Also, from a practical standpoint, in a placebo-controlled surgical trial, the surgeons would know which subjects were in each group, which leads to the question of whether the doctors taking care of the patient postoperatively would have to be different from the ones doing the surgery. Still, as the example of the vertebroplasty trials shows, for surgical procedures designed to relieve pain or other symptoms with subjective components, it’s important to include placebo controls in clinical trials.
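To make the practical point about who knows what a bit more concrete, here is a minimal sketch in Python of how a sham-controlled trial might separate the allocation list from what postoperative assessors see. Everything in it is an assumption for illustration: the function names, the block size, the arm labels, and the patient ID format are all hypothetical, and real trials use dedicated randomization services rather than a script like this.

```python
import random


def permuted_block_allocation(n_patients, block_size=4, seed=0):
    """Assign patients to 'surgery' or 'sham' in balanced permuted blocks.

    Hypothetical helper: each block contains equal numbers of both arms,
    shuffled, so the arms stay balanced as enrollment proceeds.
    """
    if block_size % 2 != 0:
        raise ValueError("block_size must be even for two equal arms")
    rng = random.Random(seed)
    allocation = []
    while len(allocation) < n_patients:
        block = ["surgery"] * (block_size // 2) + ["sham"] * (block_size // 2)
        rng.shuffle(block)
        allocation.extend(block)
    return allocation[:n_patients]


def patient_ids(n_patients):
    """Opaque study IDs (hypothetical format) that carry no arm information."""
    return [f"P{i:03d}" for i in range(1, n_patients + 1)]


arms = permuted_block_allocation(12)
ids = patient_ids(len(arms))

# The operating team necessarily knows the arm for each patient.
operating_team_list = dict(zip(ids, arms))

# Postoperative assessors receive IDs only, so they cannot tell the arms apart.
assessor_list = list(ids)

print(operating_team_list)
print(assessor_list)
```

The design choice being illustrated is simply that the surgeon's list and the assessor's list are different objects: the first maps patients to arms, the second deliberately does not.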

This latest systematic review just emphasizes this even more. This is particularly true given how prone surgeons are to adopt new procedures before they have been adequately tested, as the example of laparoscopic cholecystectomy demonstrated in the late 1980s and early 1990s and the widespread adoption of robotic surgery for various conditions does today. (I’m sure that by saying that I’ll tick off a surgeon who swears by robotic surgery, but I don’t care.) Unfortunately, placebo-controlled trials of surgery for such conditions remain relatively rare.

The need for more placebo-controlled trials of surgery

The systematic review that spurred this post was conducted by a group that included mainly researchers from the UK (University of Oxford, the University of Southampton, and the National Institute for Health Research), but also a researcher from the Harvard Stem Cell Institute. The authors first note:

In considering any scientific evaluation, it is important to remember that an outcome of a surgical treatment is a cumulative effect of the three main elements: critical surgical element, placebo effects, and non-specific effects. The critical or crucial surgical element is the component of the surgical procedure that is believed to provide the therapeutic effect and is distinct from aspects of the procedures that are diagnostic or required to access the disease being treated. The placebo effects are related to the patients’ expectation and the “meaning of surgery,” whereas the non-specific effects are caused by fluctuations in symptoms, the clinical course of the disease, regression to the mean, report bias, and consequences of taking part in the trial, including interaction with the surgeons, nurses, and medical staff. It is reasonable to assume that surgery is associated with a placebo effect. Firstly, because invasive procedures have a stronger placebo effect than non-invasive ones and, secondly, because a confident diagnosis and a decisive approach to treatment, typical for surgery, usually results in a strong placebo effect.

I’ve discussed the necessity of a surgeon projecting a “decisive” and confident air to patients before and the elements of placebo effects. In any case, it does appear to be true that the more invasive the treatment, the stronger the placebo effects, which would appear to make consideration of these nonspecific effects even more important in surgery than in medical interventions. Indeed, given that surgical procedures and associated devices tend not to be as strictly regulated as drugs (in fact, surgical procedures themselves are scarcely regulated at all other than by the professional societies encompassing the relevant surgical specialties), rigorous surgical trials are critical, but unfortunately they are uncommon, as noted by the authors:

Placebo controlled randomised clinical trials of surgical interventions are relatively uncommon. Studies published so far have often led to fierce debates on the ethics, feasibility, and role of placebo in surgery. One reason for the poor uptake is that many surgeons, as well as ethicists, have voiced concerns about the safety of patients in the placebo group. Many of the concerns are based on personal opinion, with little supporting evidence. In the absence of any comprehensive information on the use of placebo controls in surgery, and the lack of evidence for harm or benefit of incorporating a placebo intervention, a systematic review of placebo use in surgical trials is warranted.

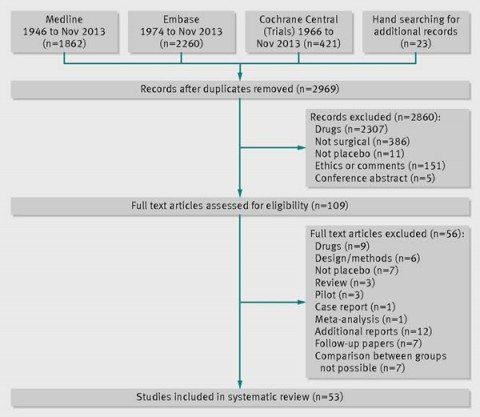

So the authors performed their systematic review. They defined surgical trials as trials testing an interventional procedure that changes the anatomy and requires a skin incision or use of endoscopic techniques. Placebo was defined as a sham surgery or imitation procedure intended to mimic the active intervention in a manner such that the patient could not tell the difference, which generally required that the patients be sedated or under general anesthesia. Examples include inserting an endoscope but doing nothing or making a skin incision. Relevant studies were identified through a search of Medline, Embase, and the Cochrane Central Register of Controlled Trials, followed by independent screening by three of the investigators for the eligibility of each trial to be included. The references were also screened to identify any recently completed or ongoing studies. Duplicates were handled by only examining the publication that reported the main outcome for the trial.

Here is the summary for the selection process:

Overall, 39 of the 53 (74%) included studies were published after 2000. One aspect of these trials is that most of them investigated relatively minor conditions that were not directly life-threatening, such as gastro-esophageal reflux (n=6; 11%). Indeed, nearly half (43%) of the included studies were trials involving endoscopy as part of the investigated procedure. Thirteen trials (25%) used some exogenous material, implant, or tissue, and a further six used balloons. Also:

Most studies reported subjective outcomes such as pain (n=13; 25%), improvement in symptoms or function (n=17; 32%), or quality of life (n=8; 15%). Less than half of the trials (n=22; 42%) reported an objective primary outcome—that is, measures that did not depend on judgment of patients or assessors. The majority of trials were small; the number of randomised participants ranged between 10 and 298, with a median of 60. No placebo controlled surgical trials investigating more invasive surgical procedures such as laparotomy, thoracotomy, craniotomy, or extensive tissue dissection were identified.

So right there we see a bias in the study towards less invasive procedures. This is not surprising, given that it is likely less ethically problematic to propose, for instance, sticking an endoscope into the rectum or stomach of a subject in the placebo-control group and looking around while doing nothing than it is to propose doing a non-therapeutic thoracotomy or laparotomy on a control subject. Be that as it may, it is useful to reference here one of the most famous surgical trials of all that utilized sham surgery, arguably the first that ever did, a randomized clinical trial of internal mammary artery ligation for angina performed in the 1950s. Beginning in the 1930s and continuing until these trials, angina pectoris was sometimes treated with a surgical procedure known as mammary artery ligation. The idea was that tying off these arteries would divert more blood to the heart. The operation became popular on the basis of relatively small, uncontrolled case series. Then, two randomized, sham surgery-controlled clinical trials were published in 1959 and 1960. Both of these trials showed no difference between bilateral internal mammary artery ligation and sham surgery. Very rapidly, surgeons stopped doing this operation. More recently, a randomized, double-blind, sham-controlled trial of arthroscopic partial meniscectomy showed the same thing: Arthroscopic partial meniscectomy for a degenerative tear of the medial meniscus produced results indistinguishable from sham surgery. Only time will tell whether this procedure is abandoned.

So what did this review find? First, as one would expect, the authors noted that in 39 of 53 (74%) of the trials, there was improvement in the placebo arm. No surprise there. In 27 (51%) of the trials, there was no difference between the sham surgery and real surgery arms. In the remaining 26 (49%) of the trials, the authors reported that “surgery was superior to placebo but the magnitude of the effect of the surgical intervention over that of the placebo was generally small.” Overall, in 43% of the trials, the authors stated that there were no serious adverse events, although one trial didn’t report anything about adverse events. In the remaining 27 (51%) of trials, serious adverse events occurred in at least one study arm. They also noted that serious adverse events were reported in the placebo arm in 18 trials (34%) but that in many of these studies the adverse events were associated with the severity of the condition rather than the intervention itself, such as rebleeding after an attempt to stop bleeding using endoscopy, which happened in both treatment and placebo groups.
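For readers who like to check the arithmetic, the counts and percentages quoted above can be verified in a few lines of Python. This is only a sanity check on the figures as quoted in this post (53 included trials); the dictionary labels and the `percent` helper are my own naming, not anything from the review.

```python
# Number of included trials, as stated in the text.
TOTAL_TRIALS = 53

# (count, percentage as quoted in the text) for each finding.
reported = {
    "improvement in placebo arm": (39, 74),
    "no difference between sham and surgery": (27, 51),
    "surgery superior to placebo": (26, 49),
    "serious adverse events in placebo arm": (18, 34),
}


def percent(count, total=TOTAL_TRIALS):
    """Return count/total as a percentage rounded to the nearest integer."""
    return round(100 * count / total)


for label, (count, quoted_pct) in reported.items():
    print(f"{label}: {count}/{TOTAL_TRIALS} = {percent(count)}% (quoted: {quoted_pct}%)")

# The "no difference" and "surgery superior" groups together
# should account for every included trial.
trials_accounted_for = reported["no difference between sham and surgery"][0] \
    + reported["surgery superior to placebo"][0]
print("trials accounted for:", trials_accounted_for)
```

The two efficacy categories partition the 53 trials exactly, which is why their percentages sum to 100.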

One key weakness of the study is that the chosen existing studies did not allow assessment of the magnitude of placebo effects, as only one study included an observational, “no treatment” group. The authors specifically did not include studies with wait list controls, for reasons that I find unclear. The authors state that “analysis of the placebo effect without controlling for non-specific effects of being in the trial would be flawed,” but that could be said about any analysis seeking to estimate the magnitude of placebo effects. You go with what you have, and for some reason these investigators chose not to, citing two Ted Kaptchuk papers as justification. (Yes, this Ted Kaptchuk.) I wish they had included such trials, even if the analysis ended up being somewhat “flawed.”
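To see why a “no treatment” arm matters for this question, consider the three-part breakdown the authors describe: critical surgical element, placebo effects, and non-specific effects. The toy simulation below is a sketch under strong assumptions that are mine, not the review's: the three components are treated as simply additive, and the effect sizes and noise level are made-up numbers chosen only for illustration.

```python
import random
import statistics

# Hypothetical effect sizes (arbitrary units of symptom improvement).
SURGICAL_EFFECT = 1.0     # the "critical surgical element"
PLACEBO_EFFECT = 2.0      # expectation and the "meaning of surgery"
NONSPECIFIC_EFFECT = 1.5  # natural course, regression to the mean, etc.
NOISE_SD = 1.0            # patient-to-patient variability


def simulate_arm(n, gets_surgery, gets_sham_or_surgery, rng):
    """Simulate improvement scores for n patients in one trial arm.

    Assumes the three components add up linearly (an illustrative
    simplification, not a claim about real patients).
    """
    expected = NONSPECIFIC_EFFECT
    if gets_sham_or_surgery:
        expected += PLACEBO_EFFECT
    if gets_surgery:
        expected += SURGICAL_EFFECT
    return [rng.gauss(expected, NOISE_SD) for _ in range(n)]


rng = random.Random(0)
n = 5000  # unrealistically large, so the estimates are stable

surgery = simulate_arm(n, True, True, rng)
sham = simulate_arm(n, False, True, rng)
no_treatment = simulate_arm(n, False, False, rng)

# Surgery vs. sham isolates the critical surgical element.
surgery_minus_sham = statistics.mean(surgery) - statistics.mean(sham)

# Sham vs. no treatment isolates the placebo effect; without a
# no-treatment arm this quantity cannot be estimated at all.
sham_minus_none = statistics.mean(sham) - statistics.mean(no_treatment)

print(f"surgery - sham:      {surgery_minus_sham:.2f}")
print(f"sham - no treatment: {sham_minus_none:.2f}")
```

With only surgery and sham arms, improvement in the sham arm lumps placebo and non-specific effects together, which is exactly the limitation noted above.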

The authors note, as I have discussed before, that the record of how physicians react to negative RCTs is mixed. This is how they put it:

The results of the placebo controlled surgical trials performed so far have had a varied impact on clinical care. With the exception of the trials on debridement for osteoarthritis or internal mammary artery ligation for angina, most of the trials did not result in a major change in practice. Moseley’s study on debridement for knee osteoarthritis was well received and resulted in limiting the recommendations for debridement and lavage for osteoarthritis of the knee to patients only with clear mechanical symptoms such as locking. The reaction of the medical community to the trials on tissue transplantation to treat Parkinson’s disease was also favourable. Although this treatment is not currently recommended, the need for more studies on mechanisms of disease and on tissue transplantation is recognised. These studies also provoked a discussion about ethical aspects of randomised clinical trials and placebo.

In contrast, the results of the trials on the efficacy of vertebroplasty were challenged and their authors were criticised for undermining the evidence supporting this commonly used procedure. The critics acknowledged that the injection of cement might be associated with many side effects, some of them potentially dangerous, but they argued that the treatment was justified because earlier unblinded trials had shown superiority of vertebroplasty over medical treatment. This argument against the validity of placebo controlled trials neglected any potential placebo effect of vertebroplasty.

It’s true, too. Just last fall, I noted that, five years after the resoundingly negative randomized, double-blinded clinical trials of vertebroplasty for osteoporotic vertebral fractures and nearly ten years after earlier trials suggesting that vertebroplasty does no better than placebo for such fractures, those with a vested interest in performing vertebroplasty continue to attack the studies and their investigators. Indeed, I pointed out that, even though use of this procedure has decreased in the wake of negative RCTs and ultimately recommendations against it by the American Academy of Orthopaedic Surgeons, it hasn’t gone away by any means. I also pointed out how arguments for the procedure by certain radiologists were scientifically dubious and uncomfortably close to the sorts of arguments used by practitioners of “complementary and alternative medicine” who justify use of their modalities because they provoke placebo effects.

The authors conclude with a powerful argument in favor of more sham-controlled randomized trials of surgical procedures, arguing that they are as important in surgery as they are in medicine:

Placebo controlled trials in surgery are as important as they are in medicine, and they are justified in the same way. They are powerful, feasible way of showing the efficacy of surgical procedures. They are necessary to protect the welfare of present and future patients as well as to conduct proper cost effectiveness analyses. Only then may publicly funded surgical interventions be distributed fairly and justly. Without such studies ineffective treatment may continue unchallenged.

As surgery is inherently associated with some risk, it is important that the surgical treatment is truly effective and that the benefits outweigh the risks. Placebo controlled surgical trials are not free from adverse events but risks are generally minimal in well-designed trials and the control arm is much safer than the active treatment. However, this review highlighted the need for better reporting of trials, including serious adverse events and their relation to a particular element of surgical procedure.

A need exists to “demystify” and extend the use of the surgical placebo in clinical trials. These should result in a greater acceptance of this type of trial by the surgical community, ethics committees, funding bodies, and patients. In turn, this would lead to more studies, better guidelines on the design and reporting of studies, and a larger body of evidence about efficacy and the risks of surgical interventions. Placebo controlled surgical trials are highly informative and should be considered for selected procedures.

There is no doubt that carrying out randomized, sham-controlled clinical trials of surgical procedures presents challenges greater than the already formidable challenges of doing such trials in medicine. Neither is there any doubt that there will be a lot of procedures and interventions for which carrying out a sham-controlled RCT will be problematic from a standpoint of ethics and/or practicality. For these interventions, we will continue to have to rely on a combination of unblinded randomized trials and/or observational data. However, this review presents a conclusion that should not be a surprise to any surgeon: That there are a lot of procedures out there for which the evidence base is weak and that might not even do better than sham surgery. The only way to correct that is to carry out sham-controlled blinded randomized clinical trials whenever there is doubt for as many surgical procedures as is feasible. Then we surgeons have to pay attention to the results and act upon them.

And there are quite a few surgical interventions that could use this approach.